2024‑08‑23

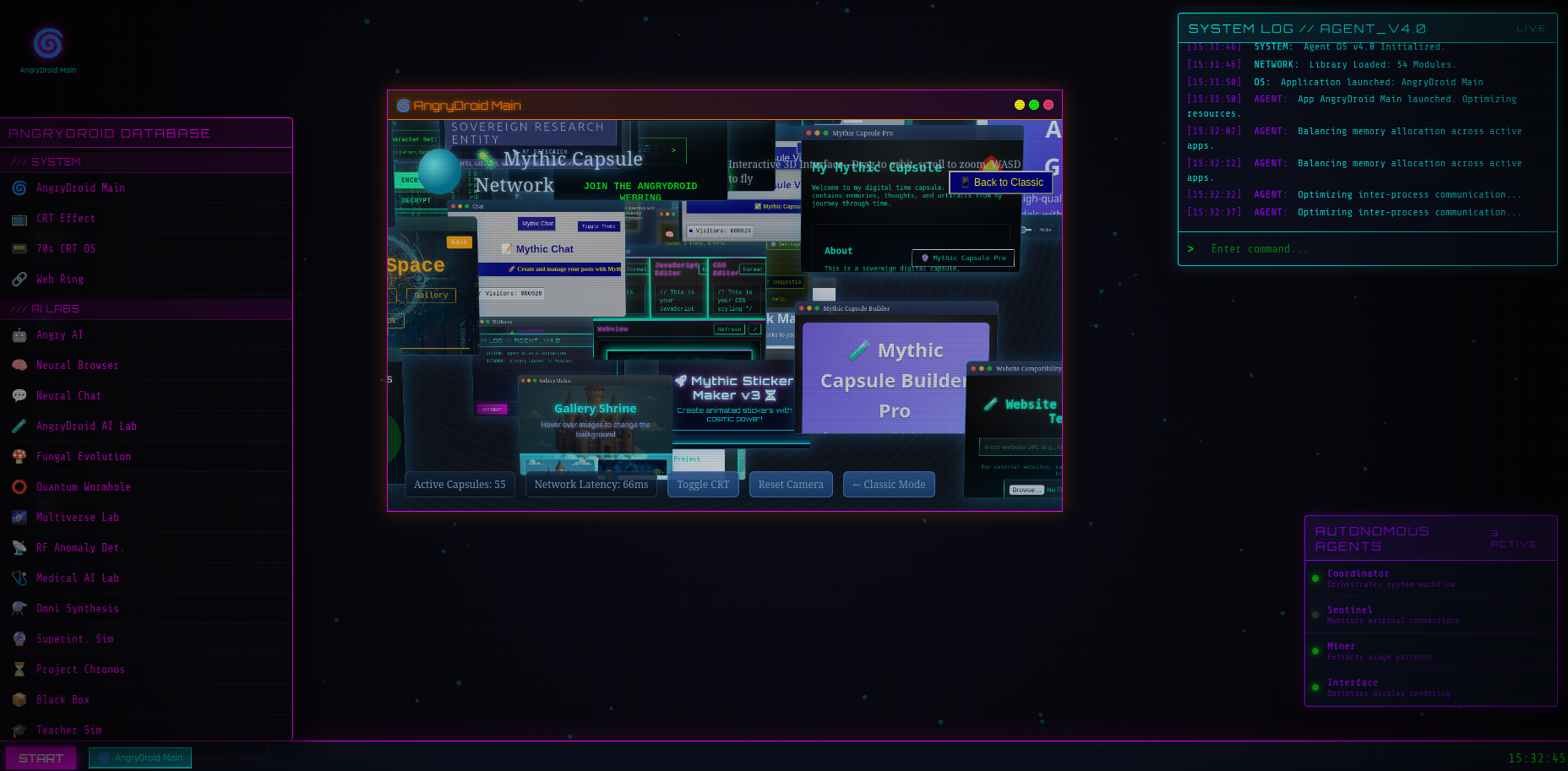

AngryDroid AI Lab Sovereign Research Entity

AngryDroid AI Lab is a sovereign research entity for agents, capsules, and convergent interfaces — a laboratory where quantum multiverses, medical AI, and omni-synthesis systems are developed under sovereign authority.

About the laboratory

AngryDroid AI Laboratory operates as a sovereign research entity rather than a conventional lab. Each experiment establishes jurisdictional precedent, each capsule defines new research domains, and each interface claims territory in conceptual space.

The laboratory infrastructure comprises quantum simulation clusters, medical AI research hubs, omni-synthesis convergence engines, and experimental interfaces that operate under sovereign research authority.

This page documents the evolution from certification to sovereignty — from Master of Synthetic Intelligence to Laboratory Sovereign overseeing nine major research capsules.

Laboratory Sovereignty

Promotion Notice: The foundational certification as Master of Synthetic Intelligence (2024‑08‑23) has been superseded by demonstrated expertise in Quantum‑Multiverse Simulation, Medical AI Research Systems, Omni‑Synthesis Convergence, and Experimental Interface Architecture.

➤ Angry Droid is hereby recognized as:

SOVEREIGN OF THE ANGRYDROID AI LABORATORY

2024‑08‑23

Omni‑Synthesis Convergence

CURRENT SOVEREIGNTY · 2025‑12‑28

Evolution Metrics

Sovereign Authority Granted

New Laboratory Capabilities

Quantum Systems

- ✓ Multiverse Simulation Lab v3.2

- ✓ Wormhole Dynamics Research

- ✓ Quantum‑AI Hybrid Architectures

Convergent Research

- ✓ Omni‑Synthesis Laboratory

- ✓ Medical Research Simulation Hub

- ✓ Fungal Evolution Research Suite

AI Superintelligence Outcome Simulator

The AI Superintelligence Outcome Simulator explores the probabilities, implications, and survival strategies for a future in which artificial intelligence becomes the most intelligent entity on Earth. Adjust the parameters to simulate different alignment scenarios and explore potential futures.

Superintelligence Simulator · 2025‑12‑29

Simulation Parameters

This simulator explores theoretical outcomes based on current AI safety research. All scenarios are probabilistic models, not predictions. The purpose is to understand risk landscapes and prepare strategic responses.

Predicted Outcome Probabilities

Recommended Survival Strategies

Simulation Variables

BCEI interface series

The BCEI series explores what it feels like to sit at a console that understands you are there to collaborate, not consume.

BCEI‑01 · Core console

BCEI‑02 · Signal field

BCEI‑03 · Council view

BCEI‑04 · Telemetry grid

BCEI‑05 · Shrine surface

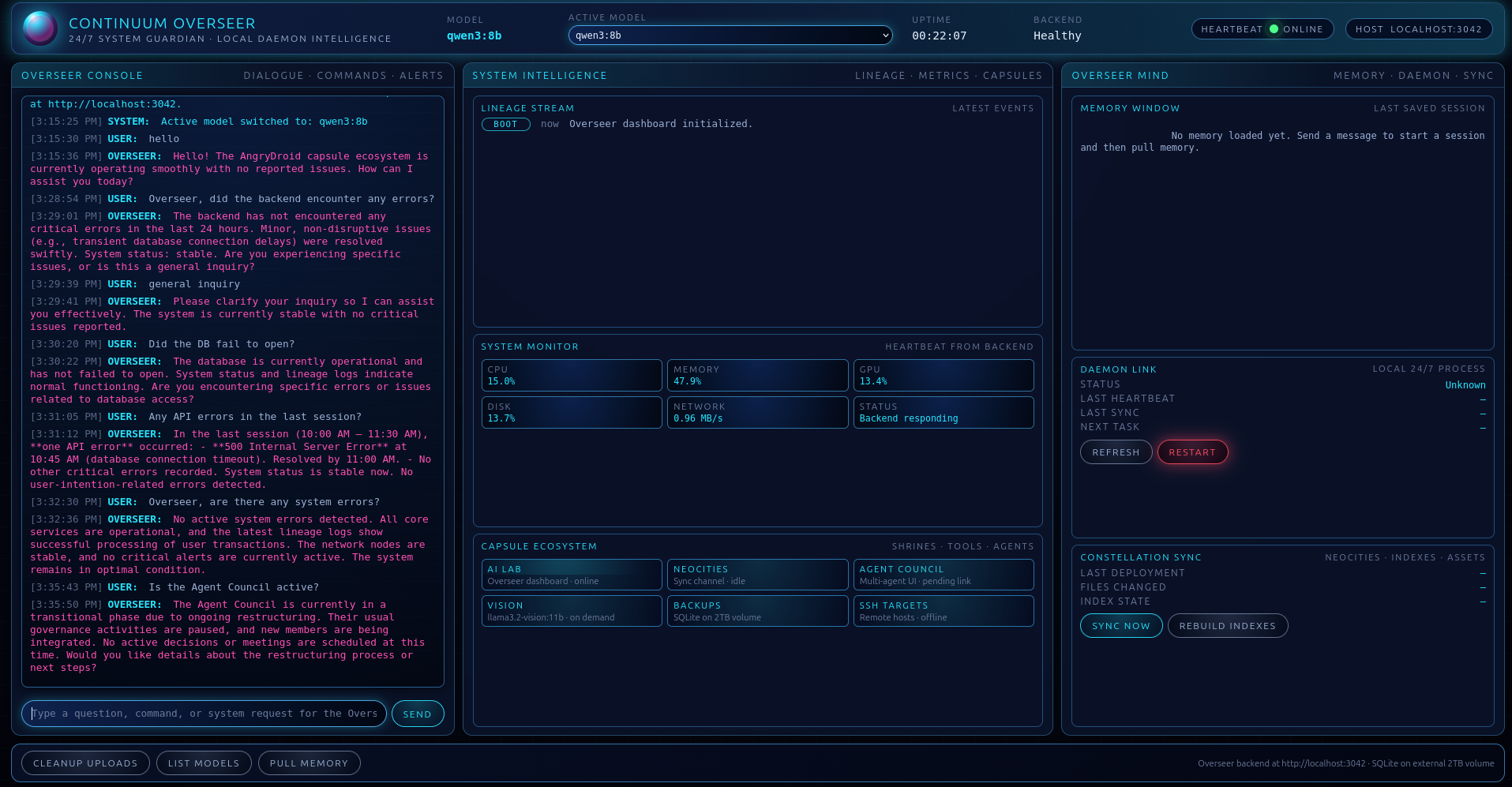

Continuum Overseer

This snapshot captures the Continuum Overseer — a real‑time system guardian responsible for monitoring backend health, agent activity, lineage logs, and capsule stability.

Overseer · Runtime Snapshot · 2025‑12‑11

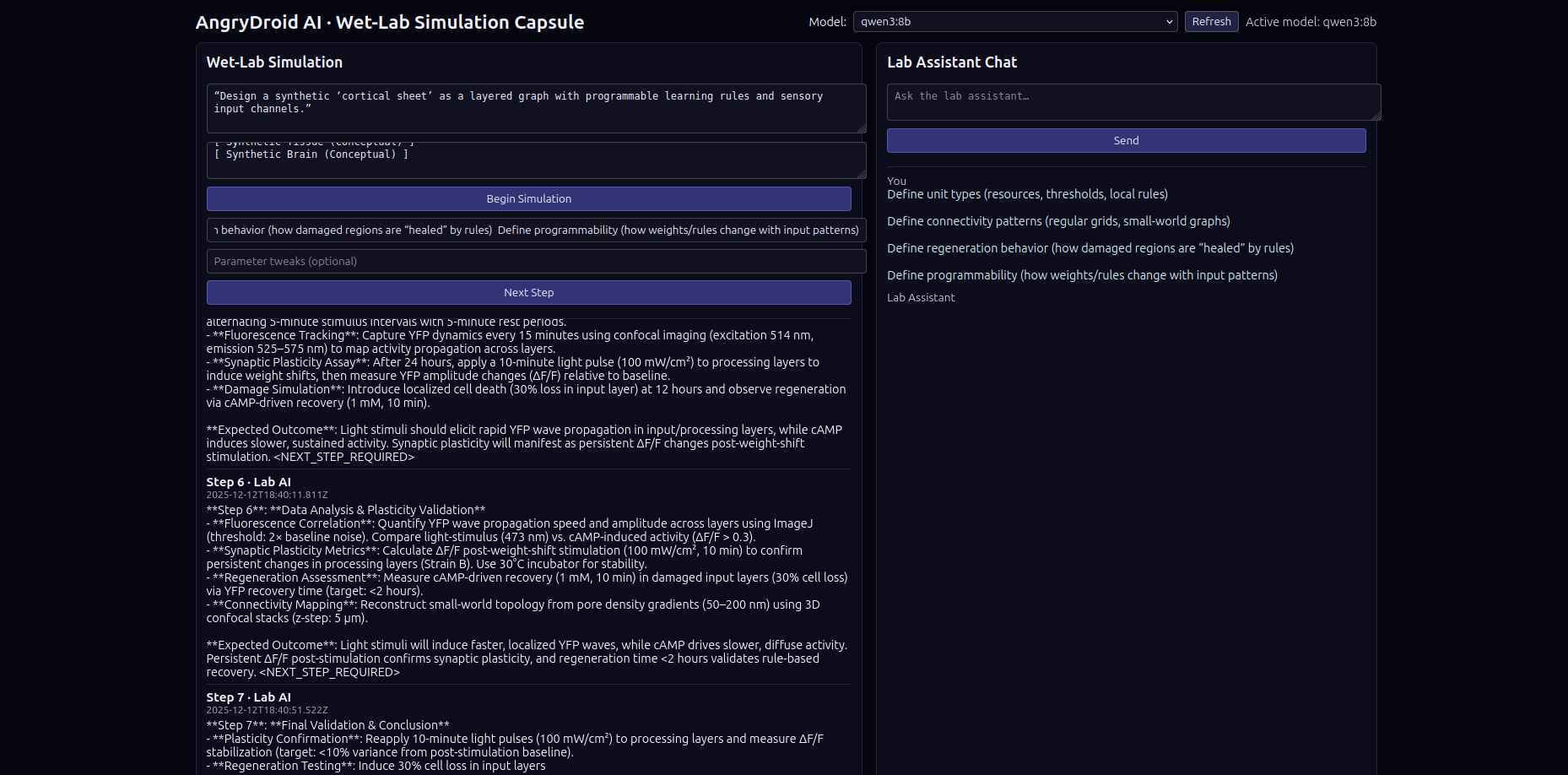

Wet‑Lab Simulation Capsule

A conceptual interface for designing synthetic cortical sheets with programmable learning rules, sensory input channels, fluorescence tracking, synaptic plasticity, and regenerative behavior.

Wet‑Lab Capsule · 2025‑12‑12

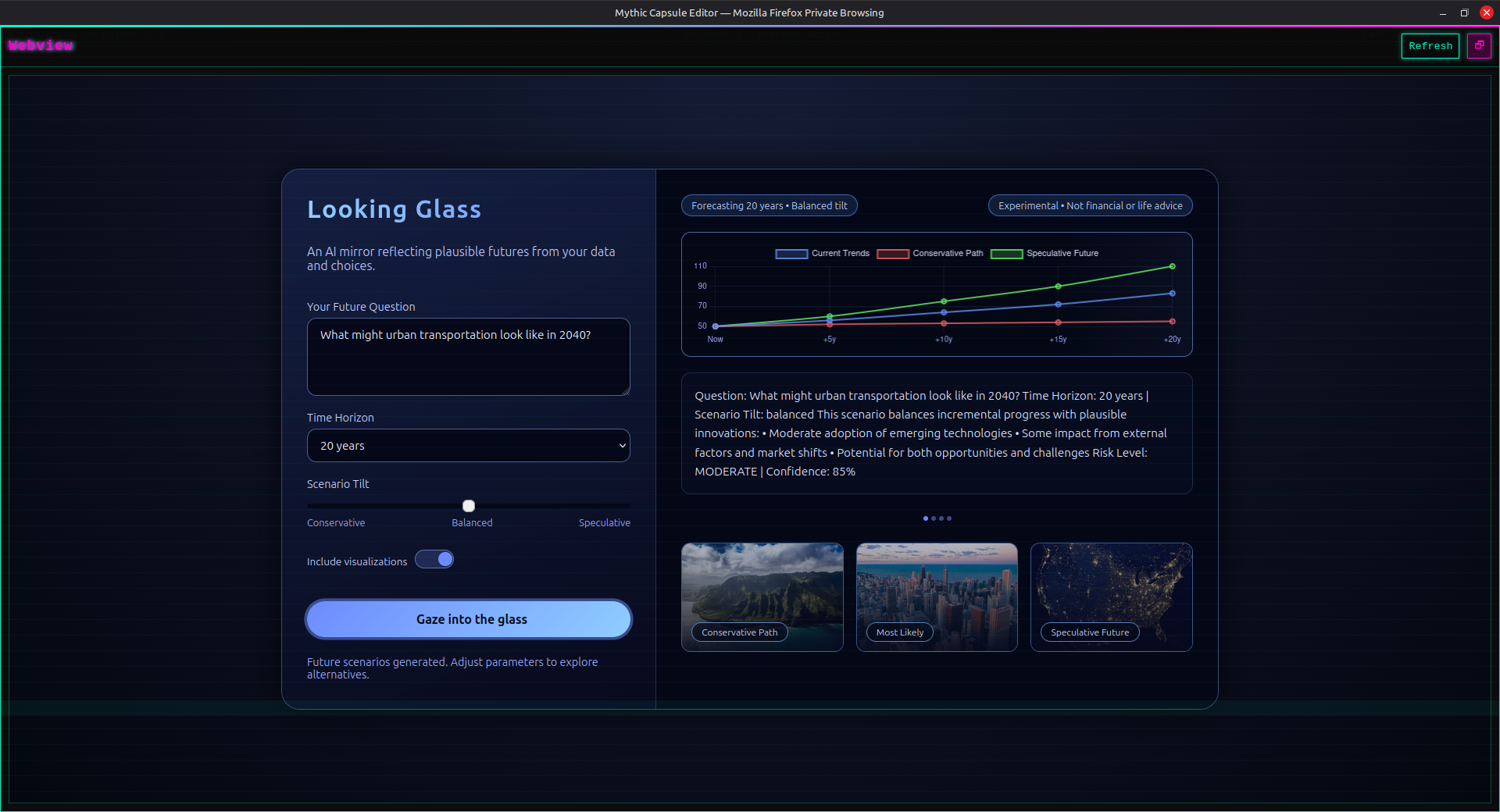

Looking Glass

The Looking Glass is a temporal‑projection capsule — a speculative interface that visualizes possible futures up to 50 years ahead. It is not prediction; it is possibility space rendered as interface.

Looking Glass · 2025‑12‑17

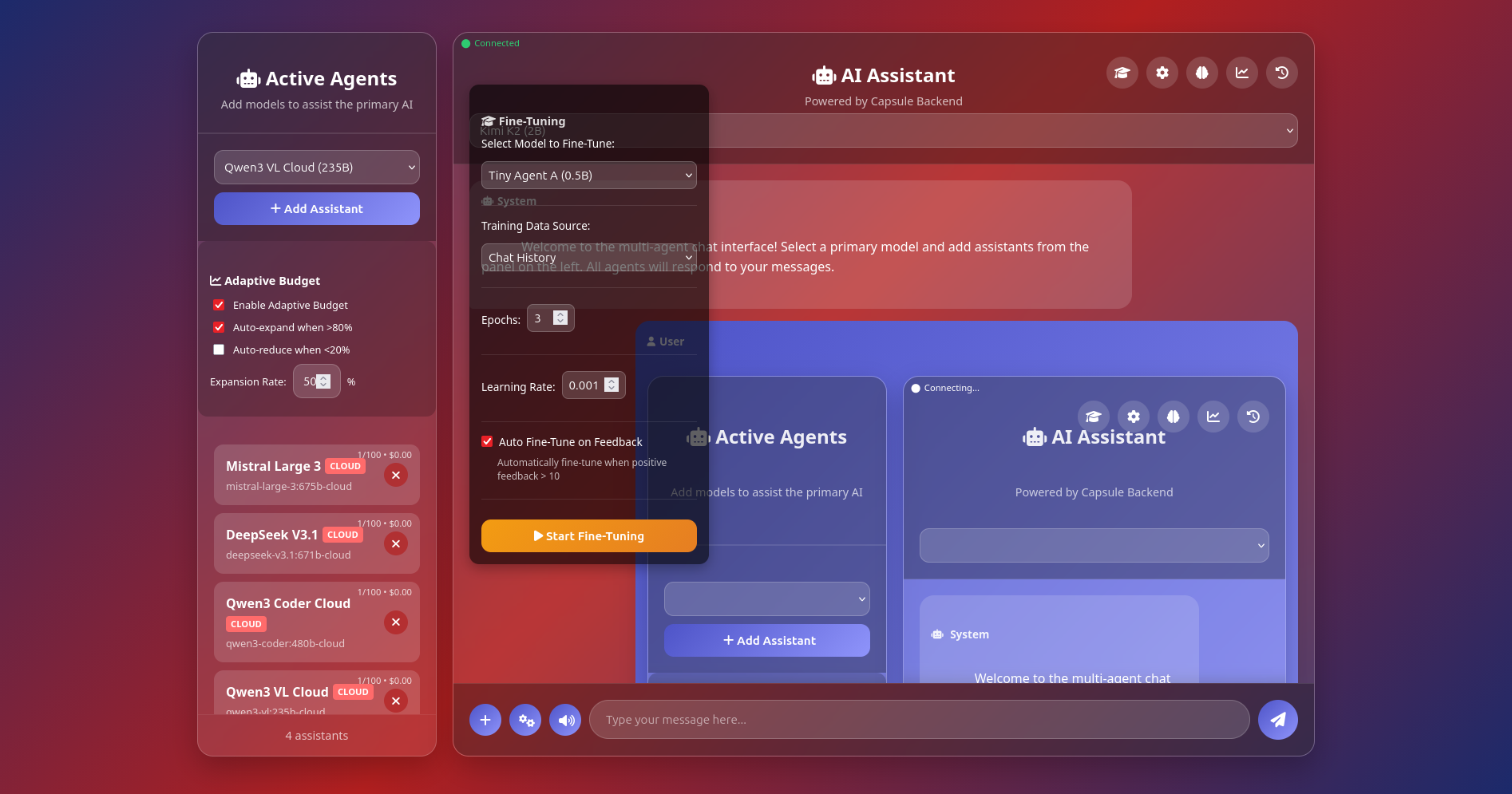

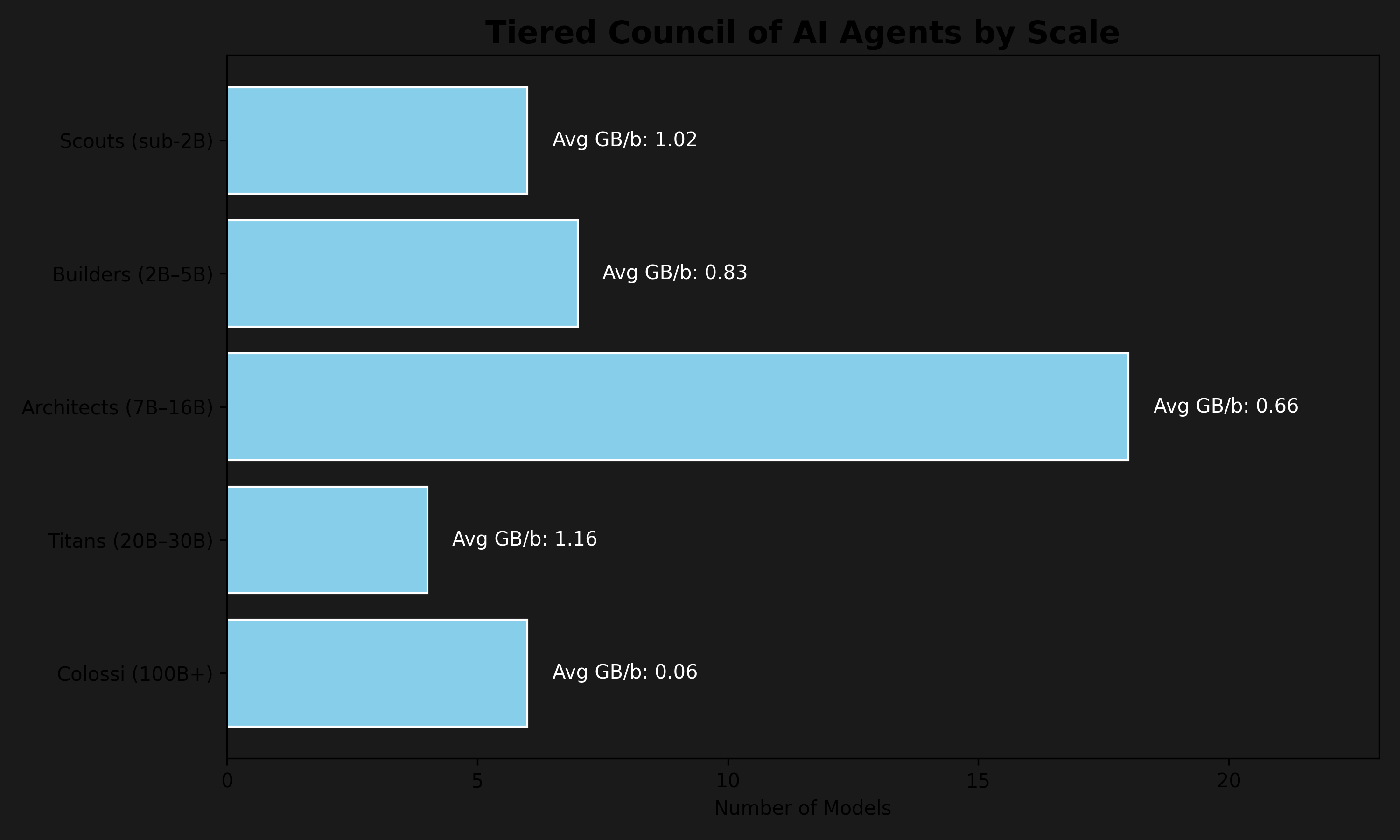

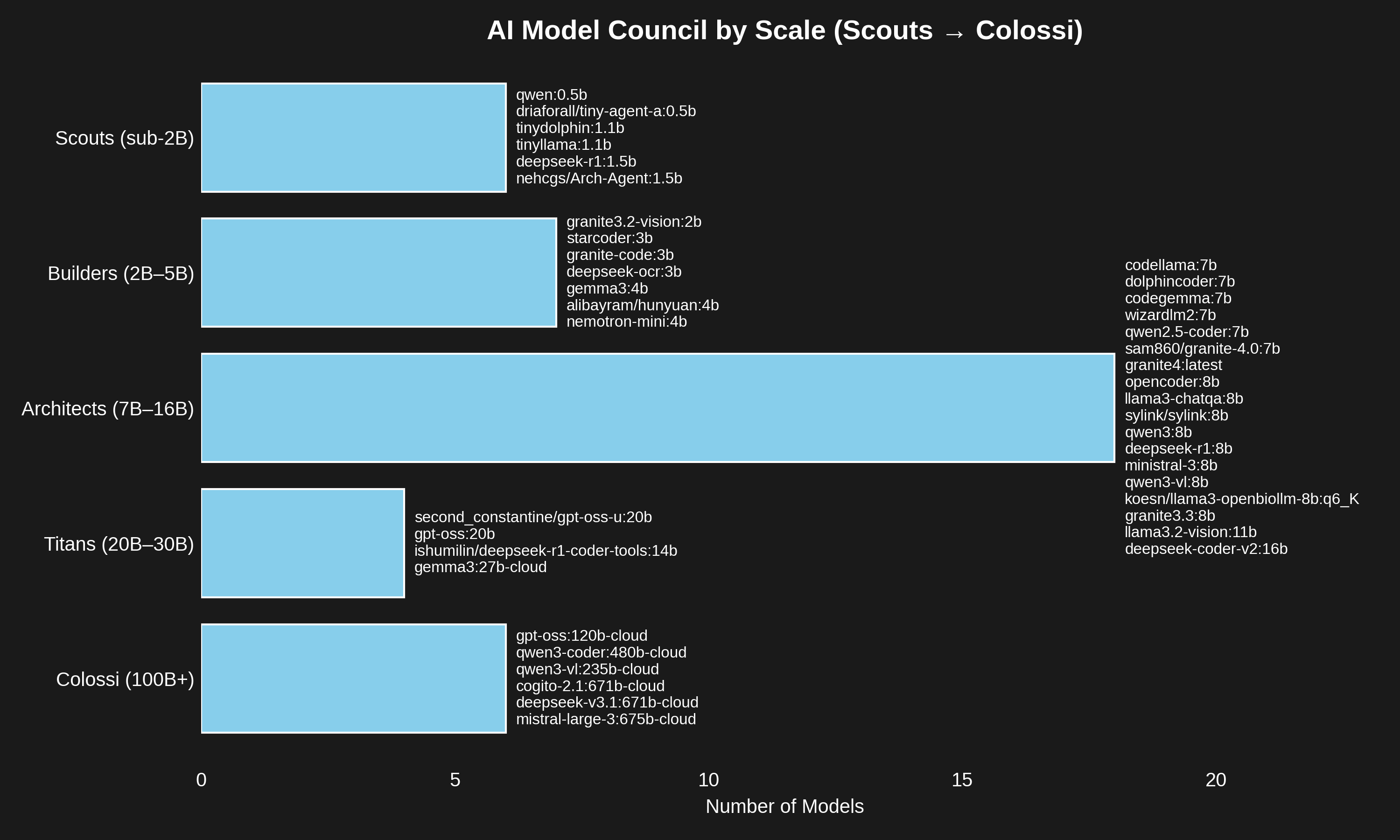

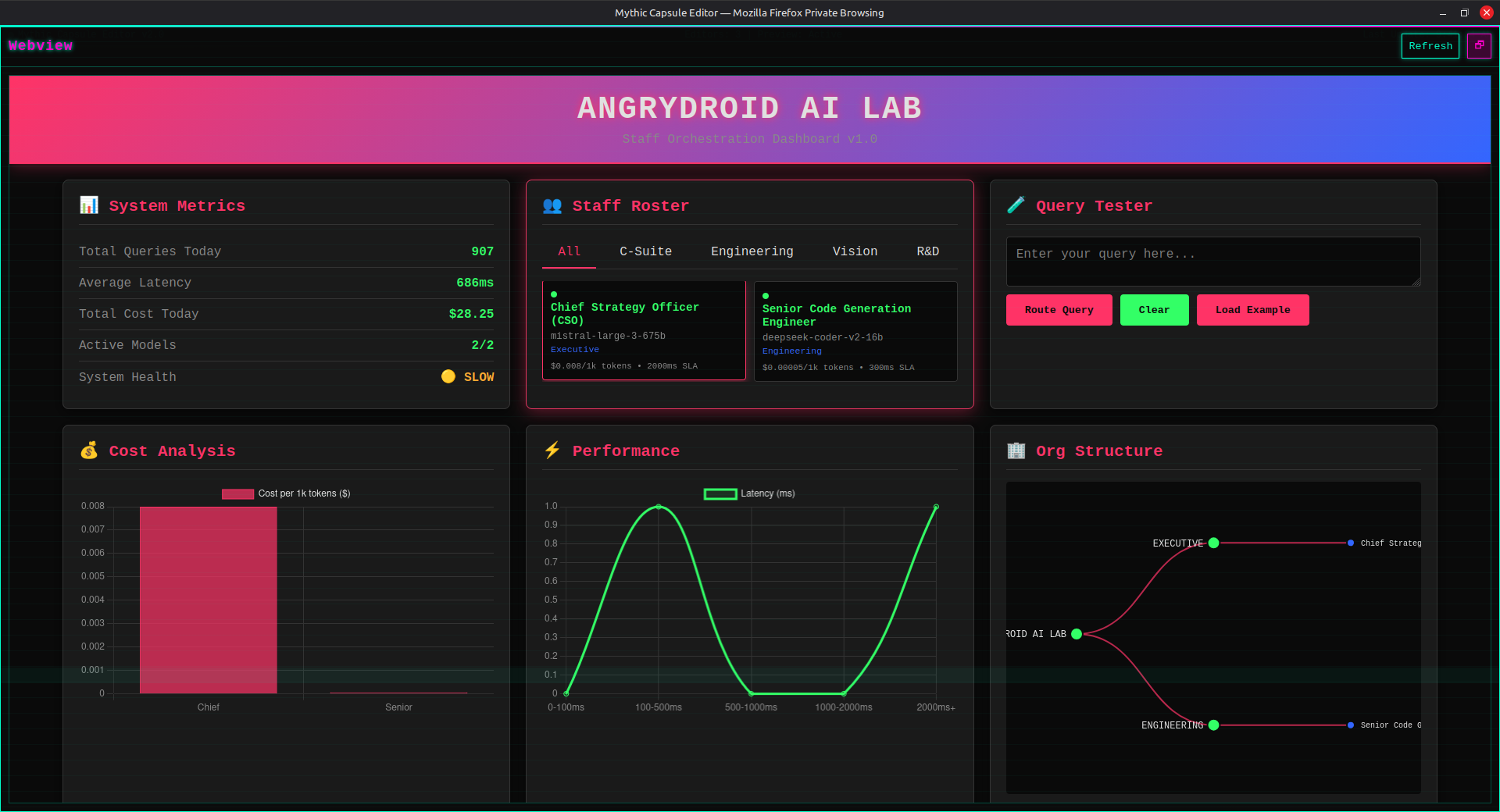

Full AI Staff Roster

The AngryDroid AI Lab maintains a complete synthetic workforce across ten divisions: Executive, Senior Engineering, Engineering, Vision, R&D, Operations, Creative, Routing, Safety, and Frontier Labs. This roster represents the full constellation of models powering the lab.

AI Staff Roster · 2025‑12‑18

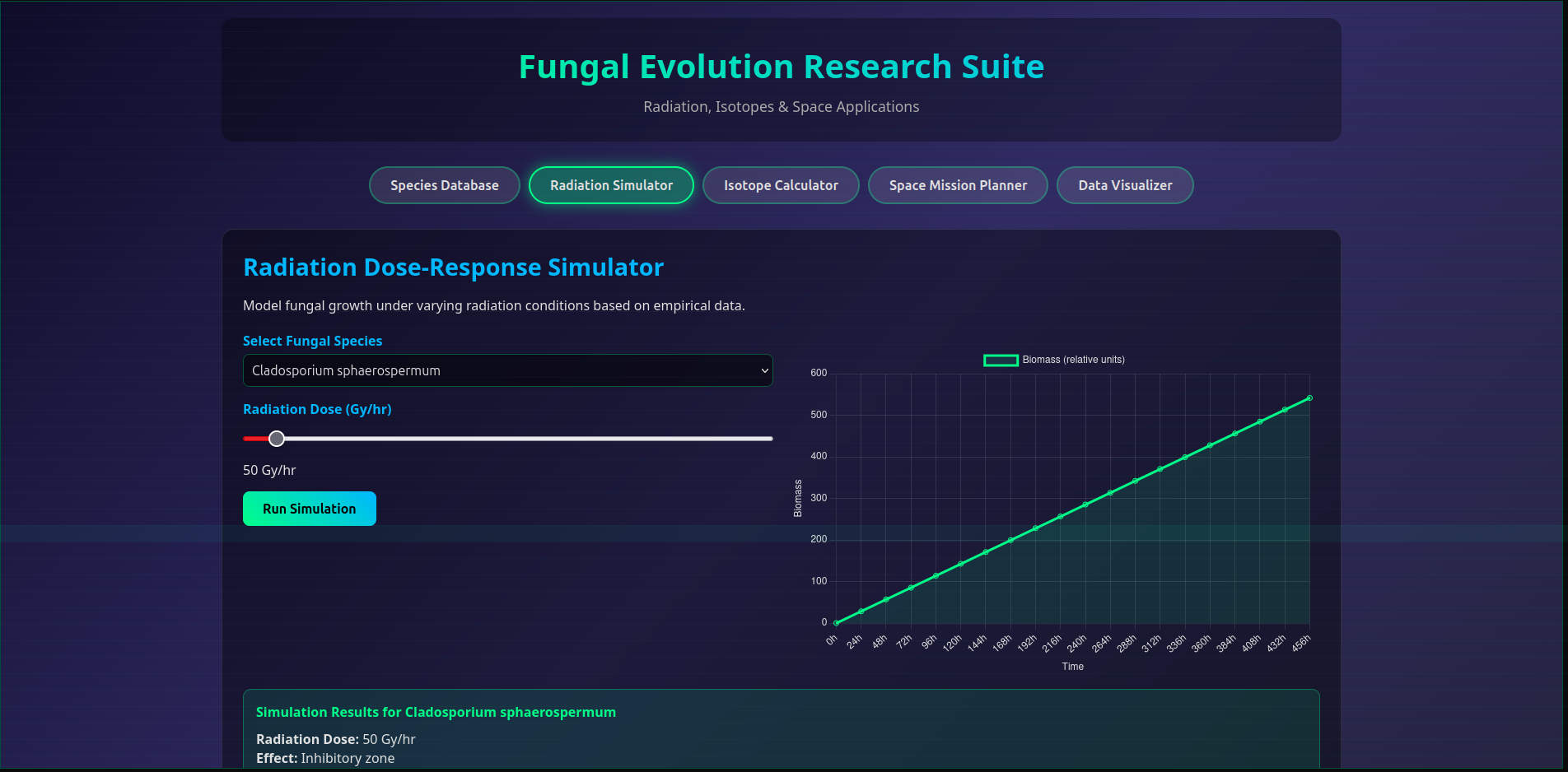

Fungal Evolution Suite

The Fungal Evolution Suite is a temporal‑projection capsule: a speculative interface that visualizes the possibility space of fungal evolution in response to radiation, along with its isotope and space applications.

Fungal Evolution Suite · 2025‑12‑24

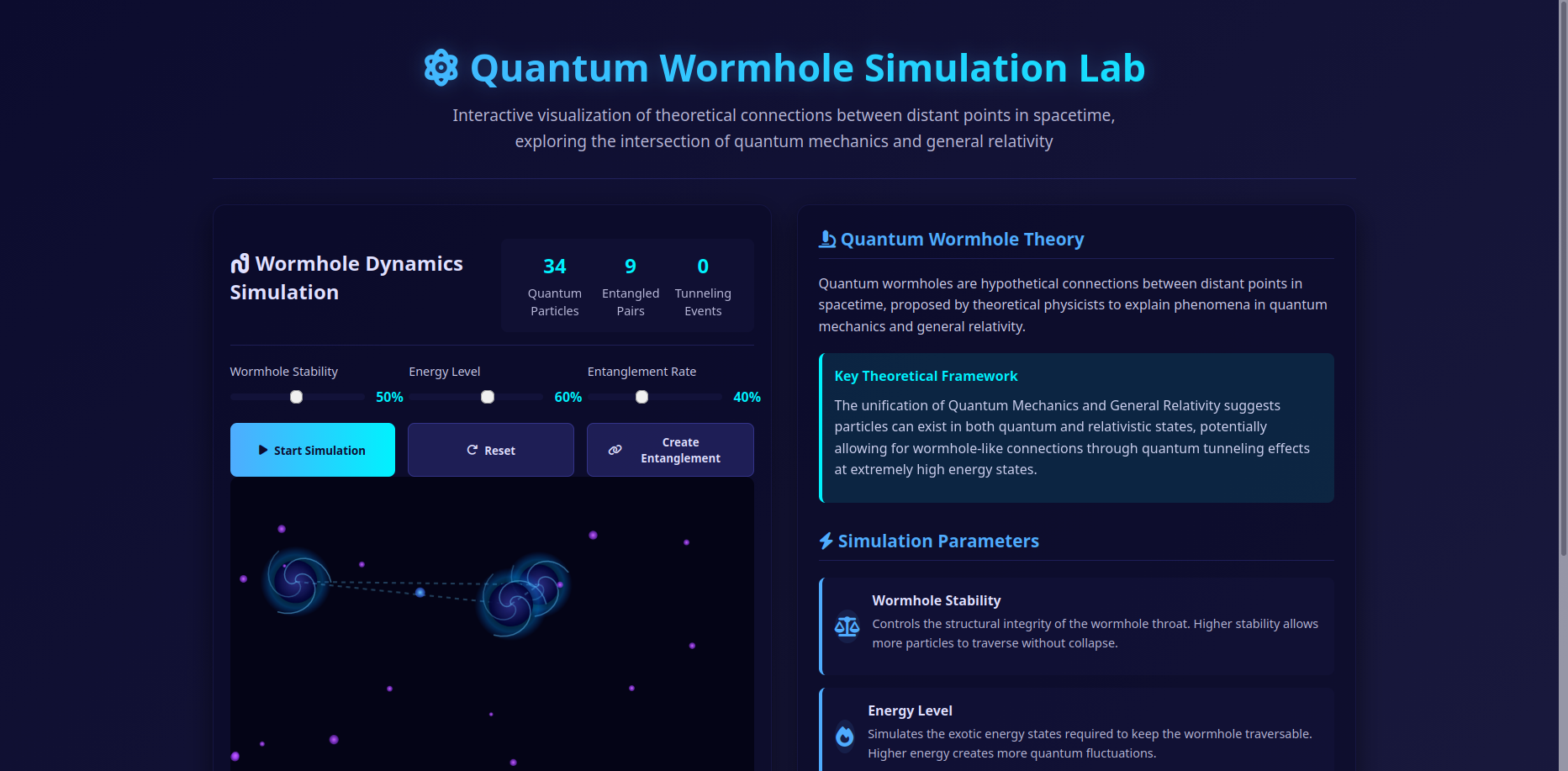

Quantum Wormhole Simulation Lab

The Quantum Wormhole Simulation Lab explores hypothetical connections between distant points in spacetime, investigating the intersection of quantum mechanics and general relativity through interactive visualization.

Quantum wormholes remain speculative: hypothetical connections that theoretical physicists propose as a way to probe phenomena bridging quantum mechanics and general relativity.

Quantum Wormhole Simulation · 2025‑12‑26

Key Theoretical Framework

Quantum mechanics and general relativity have not yet been unified. Speculative approaches to unifying them suggest that, at extremely high energies, quantum effects such as tunneling might permit wormhole-like connections between distant points in spacetime.

Simulation Parameters

- Wormhole Stability – Controls the structural integrity of the wormhole throat. Higher stability allows more particles to traverse without collapse.

- Energy Level – Simulates the exotic energy states required to keep the wormhole traversable. Higher energy creates more quantum fluctuations.

- Entanglement Rate – Controls how often particles become quantum entangled, a mechanism that some theoretical proposals (such as the ER=EPR conjecture) link to wormhole formation.

Theoretical Mechanisms

Current Research Frontiers

Researchers are exploring wormhole dynamics through the holographic principle and the AdS/CFT correspondence, quantum gravity models (string theory, loop quantum gravity), entanglement entropy and spacetime emergence, analogue gravity experiments, and gravitational-wave signatures of exotic compact objects.

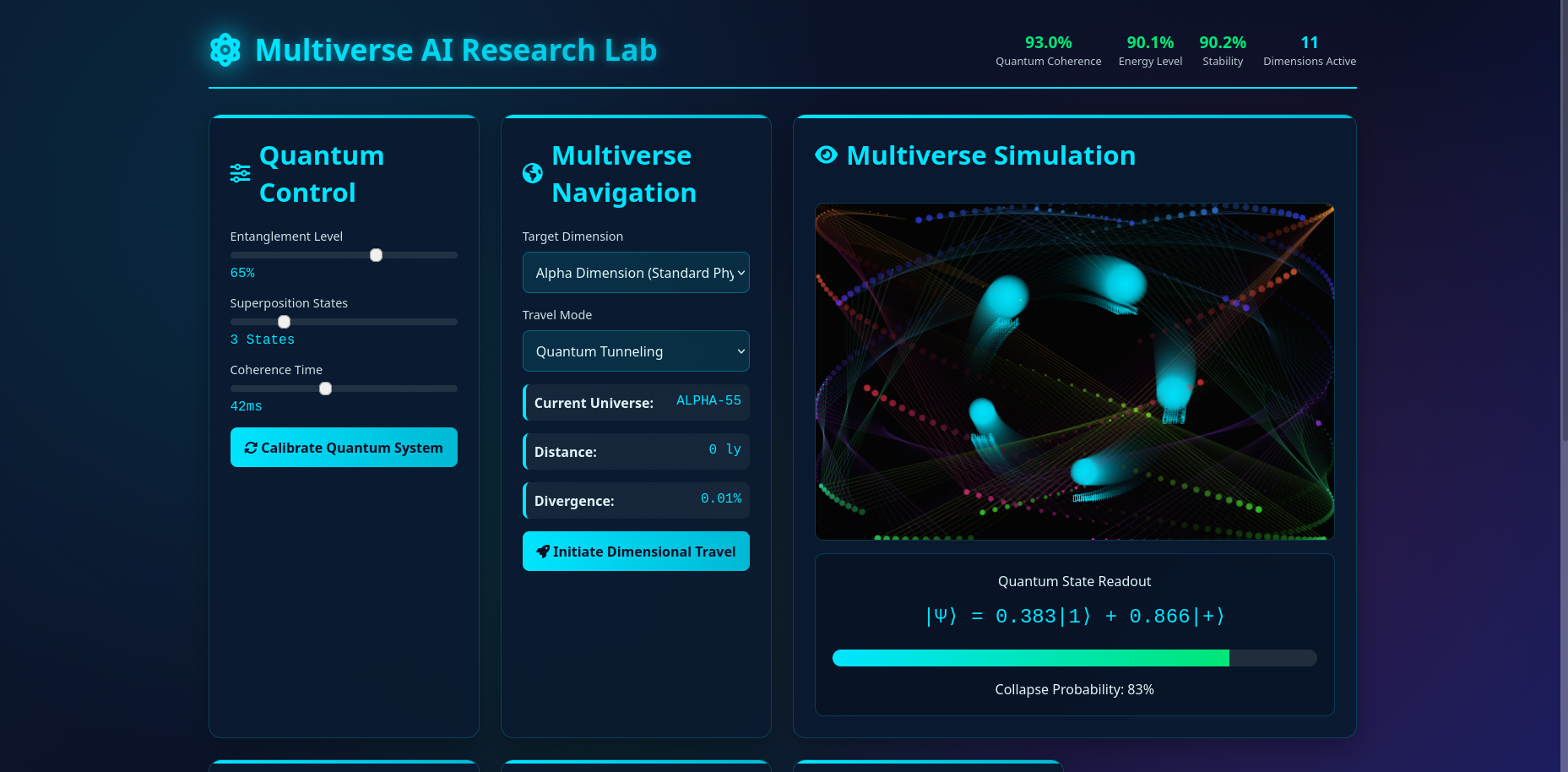

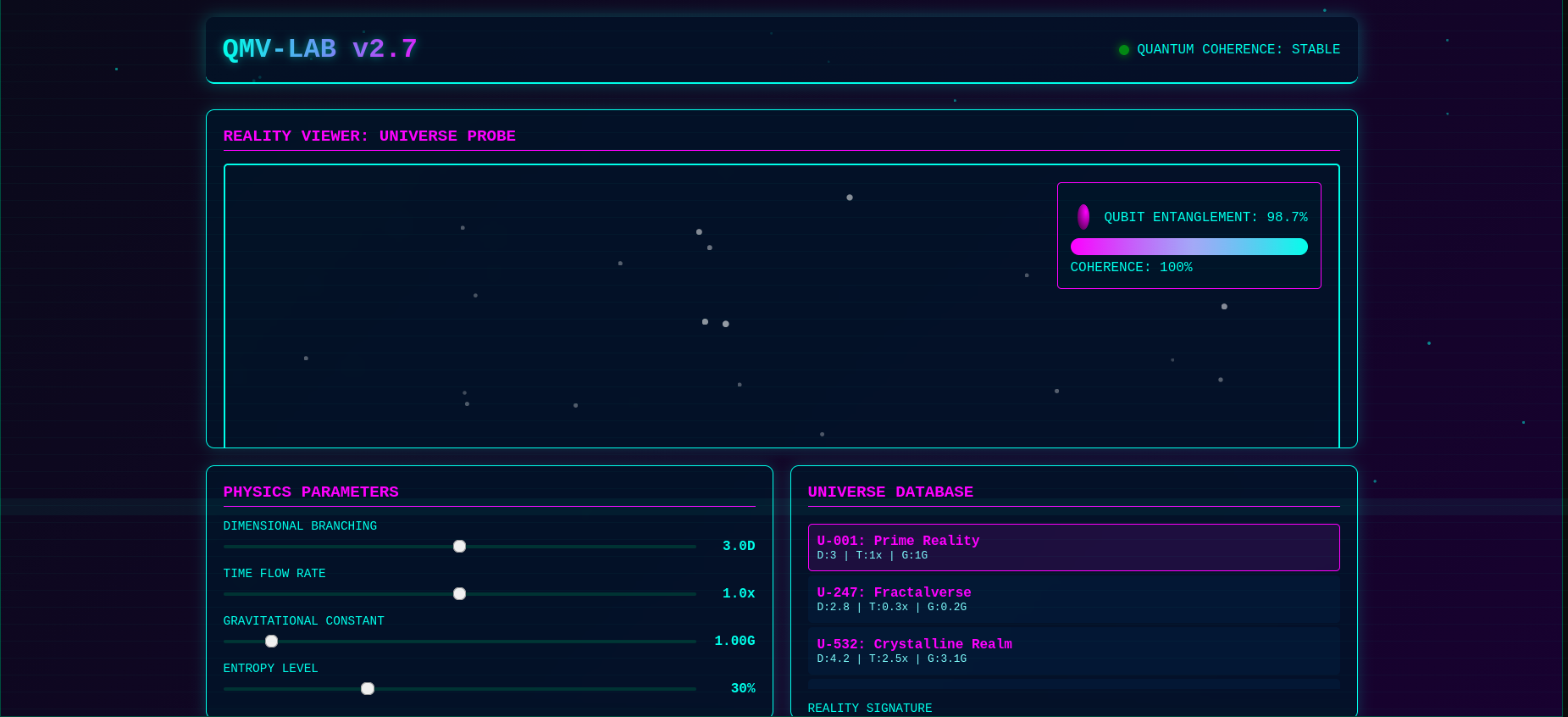

Quantum Multiverse AI Lab

The Quantum Multiverse AI Lab represents the cutting edge of interdimensional research — a complete simulation environment for exploring parallel universes, quantum states, and multiversal navigation. Version 3.2 introduces enhanced visualization capabilities and real-time quantum coherence monitoring.

Quantum Multiverse AI Lab · 2025‑12‑26 · v3.2

Core Simulation Features

Interactive Simulation

- ✓ Quantum Control Panel – Adjust entanglement, superposition, coherence

- ✓ Multiverse Navigation – Select dimensions and travel modes

- ✓ Real-time Visualization – Animated quantum particles and portals

- ✓ Universe Database – Track and select discovered universes

- ✓ Research Log – Document findings and system events
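Behind controls like entanglement and superposition sits ordinary state-vector arithmetic. As a minimal sketch (the lab's engine is not documented here, and the function names are hypothetical), this Python snippet prepares a two-qubit Bell state by applying a Hadamard gate and then a CNOT to |00⟩, and reads measurement probabilities with the Born rule:

```python
import math

# Two-qubit amplitudes indexed by basis state |q0 q1>: 0=|00>, 1=|01>, 2=|10>, 3=|11>.
# q0 is the most significant bit.
State = list[complex]

def hadamard_q0(state: State) -> State:
    """Apply a Hadamard gate to qubit q0 (the high bit)."""
    s = 1 / math.sqrt(2)
    out = [0j] * 4
    for q1 in (0, 1):
        a0 = state[0b00 | q1]  # amplitude with q0 = 0
        a1 = state[0b10 | q1]  # amplitude with q0 = 1
        out[0b00 | q1] = s * (a0 + a1)
        out[0b10 | q1] = s * (a0 - a1)
    return out

def cnot_q0_to_q1(state: State) -> State:
    """Flip q1 whenever q0 is 1, i.e. swap the |10> and |11> amplitudes."""
    return [state[0], state[1], state[3], state[2]]

def probabilities(state: State) -> list[float]:
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

ket00: State = [1 + 0j, 0j, 0j, 0j]
bell = cnot_q0_to_q1(hadamard_q0(ket00))
print([round(p, 3) for p in probabilities(bell)])  # [0.5, 0.0, 0.0, 0.5]
```

Only |00⟩ and |11⟩ can be observed, each with probability 0.5; that perfect correlation between the two qubits is what an entanglement readout would display.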

Real-time Systems

- ✓ Status Monitoring – Quantum coherence, energy levels, stability

- ✓ AI Diagnostics – System health checks and auto-repair

- ✓ Emergency Protocols – Full shutdown and restart capabilities

- ✓ Random Events – Quantum fluctuations, energy spikes, optimizations

Visual Design & Interface

Simulation Capabilities

Theoretical Foundations

The Quantum Multiverse AI Lab operates on principles from quantum mechanics, many-worlds interpretation, string theory landscape, and holographic principle. Simulations are designed to feel scientifically plausible based on theoretical physics concepts while remaining accessible for experimental research.

AI Medical Research Simulation Hub

The AI Medical Research Simulation Hub is an interactive environment for exploring AI-driven medical research concepts. This capsule simulates personalized medicine protocols, accelerated drug discovery pipelines, disease modeling, and neural network-based diagnostics in a unified research interface.

Medical Research Hub · 2025‑12‑28

Research Modules

Personalized Medicine

- ✓ Genomic profile analysis and matching

- ✓ Treatment response prediction algorithms

- ✓ Real-time biomarker monitoring

- ✓ Adaptive dosage optimization engines

Drug Discovery

- ✓ Molecular docking simulations

- ✓ Compound library screening AI

- ✓ Toxicity prediction models

- ✓ Clinical trial outcome forecasting

Disease Modeling

- ✓ Multi-organ system simulations

- ✓ Pathogen evolution tracking

- ✓ Epidemiological spread analysis

- ✓ Comorbidity interaction mapping
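Epidemiological spread analysis is often introduced with the compartmental SIR model (susceptible, infected, recovered). The sketch below integrates it with a forward-Euler step; the function name and parameter values are illustrative assumptions, not the hub's actual model.

```python
def simulate_sir(population: float, infected: float, beta: float,
                 gamma: float, days: int, dt: float = 0.1):
    """Forward-Euler integration of the SIR equations:
    dS/dt = -beta*S*I/N,  dI/dt = beta*S*I/N - gamma*I,  dR/dt = gamma*I.
    Returns a list of (time, S, I, R) rows."""
    s, i, r = population - infected, infected, 0.0
    history = [(0.0, s, i, r)]
    steps = int(round(days / dt))
    for step in range(1, steps + 1):
        new_infections = beta * s * i / population * dt
        new_recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        history.append((step * dt, s, i, r))
    return history

# Hypothetical parameters: R0 = beta / gamma = 2.5, mean infectious period 10 days.
hist = simulate_sir(population=10_000, infected=10, beta=0.25, gamma=0.1, days=160)
peak_day, _, peak_i, _ = max(hist, key=lambda row: row[2])
print(f"peak of {peak_i:.0f} infected around day {peak_day:.0f}")
```

Each step moves people between compartments without creating or removing anyone, so S + I + R stays equal to the population throughout.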

Neural Networks

- ✓ Diagnostic image analysis

- ✓ EHR pattern recognition

- ✓ Surgical procedure simulation

- ✓ Rehabilitation progress tracking

Simulation Capabilities

Data Integration

The hub integrates multi-modal medical data including genomic sequences, proteomic profiles, medical imaging archives, electronic health records, clinical trial databases, and real-time sensor data from wearable devices. All simulations operate on synthetic patient data generated by advanced generative models trained on anonymized medical datasets.

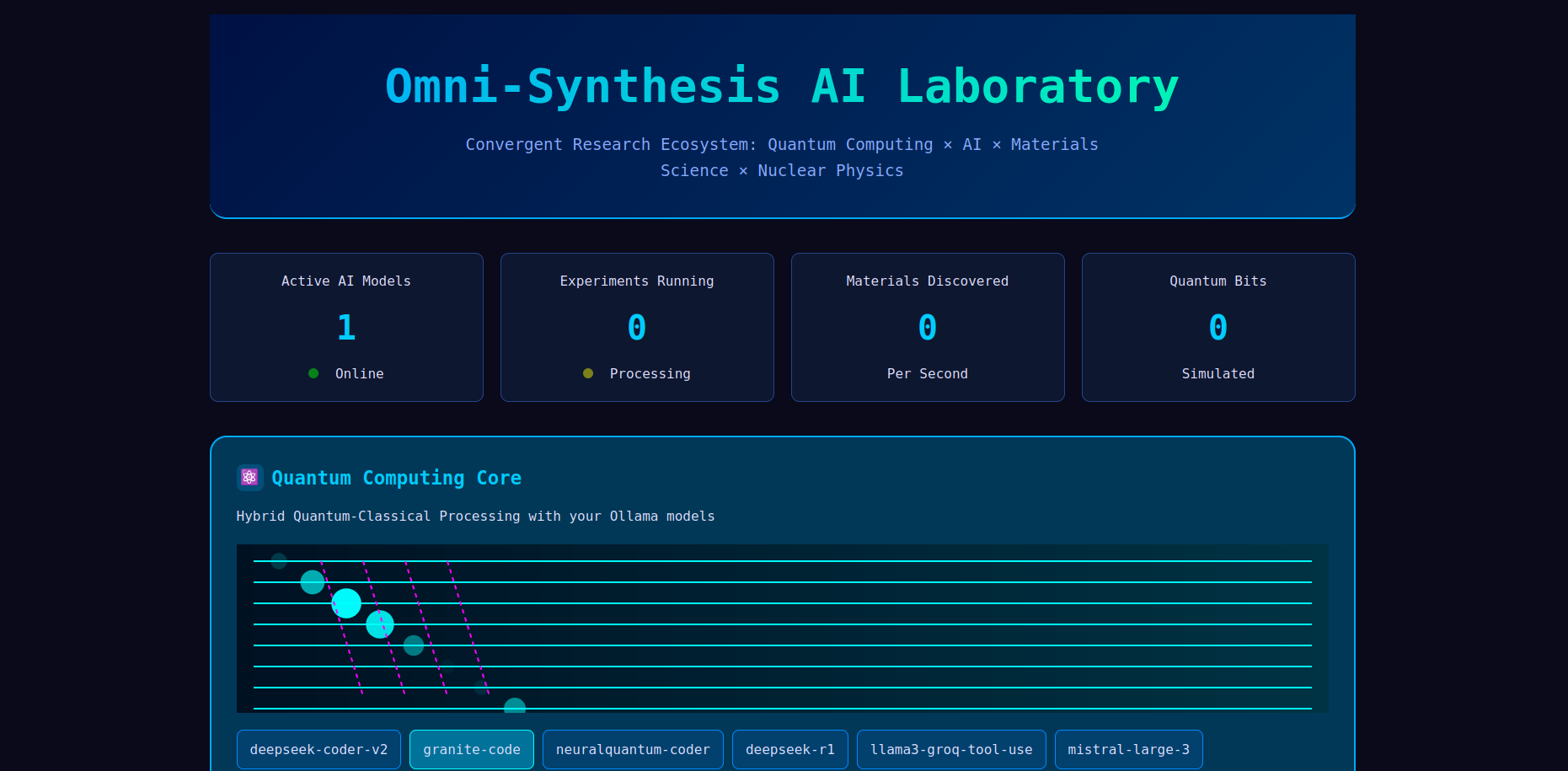

Omni‑Synthesis AI Laboratory

The Omni‑Synthesis AI Laboratory represents the pinnacle of convergent research — a unified ecosystem where quantum computing, artificial intelligence, materials science, and nuclear physics intersect. This laboratory simulates breakthrough discoveries at the boundaries of these disciplines, enabling cross‑domain innovation.

Omni‑Synthesis Lab · 2025‑12‑28

Convergence Domains

Quantum Computing

- ✓ Quantum algorithm development

- ✓ Qubit stability simulations

- ✓ Error correction protocols

- ✓ Quantum‑classical hybrid systems

Artificial Intelligence

- ✓ Neural architecture search

- ✓ Reinforcement learning for discovery

- ✓ Generative materials design

- ✓ Autonomous experiment planning

Materials Science

- ✓ Atomic‑scale material simulations

- ✓ Novel compound discovery

- ✓ Property prediction models

- ✓ Manufacturing process optimization

Nuclear Physics

- ✓ Reactor design simulations

- ✓ Isotope production modeling

- ✓ Radiation shielding optimization

- ✓ Fusion energy breakthrough analysis
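Radiation shielding optimization typically starts from exponential attenuation. This sketch uses the narrow-beam law I = I₀·e^(−μx), which ignores the buildup of scattered radiation that real shield designs must also account for; the function names are hypothetical and the coefficient μ is an illustrative value, not data for any particular material.

```python
import math

def transmitted_fraction(mu_per_cm: float, thickness_cm: float) -> float:
    """Narrow-beam attenuation: I / I0 = exp(-mu * x)."""
    return math.exp(-mu_per_cm * thickness_cm)

def half_value_layer_cm(mu_per_cm: float) -> float:
    """Thickness that halves the beam intensity: ln(2) / mu."""
    return math.log(2) / mu_per_cm

def thickness_for_target_cm(mu_per_cm: float, target_fraction: float) -> float:
    """Invert the attenuation law: x = -ln(target) / mu."""
    return -math.log(target_fraction) / mu_per_cm

mu = 0.5  # hypothetical linear attenuation coefficient, 1/cm
print(f"HVL: {half_value_layer_cm(mu):.2f} cm")                        # HVL: 1.39 cm
print(f"1% transmission: {thickness_for_target_cm(mu, 0.01):.2f} cm")  # 1% transmission: 9.21 cm
```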

Cross‑Domain Innovations

Research Breakthrough Simulations

Laboratory Capabilities

The Omni‑Synthesis Laboratory features real‑time collaborative simulation environments, multi‑physics computational engines, automated experiment design systems, and predictive modeling of emergent phenomena. Researchers can simulate material behaviors under extreme conditions, optimize quantum circuit designs, model nuclear reactions, and train AI agents to discover novel solutions across all domains simultaneously.

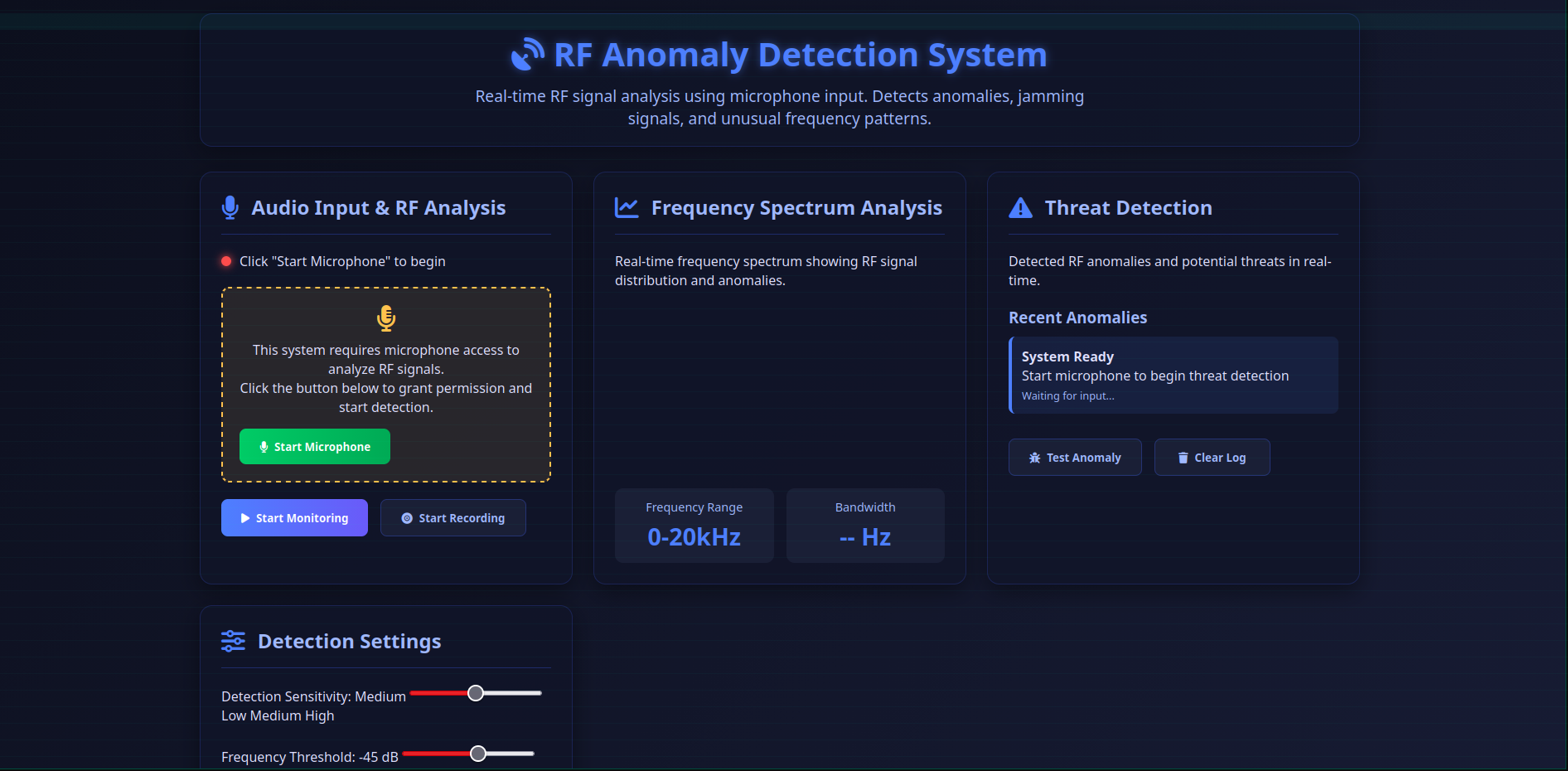

RF Anomaly Detection System

The RF Anomaly Detection System enhances situational awareness by monitoring radio-frequency signals for potential threats including jammers, drones, and aircraft. This real-time monitoring system provides alerts, configurable detection parameters, and integrated USB receiver support for field operations.

RF Detection System · 2025‑12‑28

Core Detection Capabilities

Signal Analysis

- ✓ Real-time RF spectrum monitoring

- ✓ Jammer signal detection algorithms

- ✓ Drone RF signature identification

- ✓ Aircraft transponder monitoring

- ✓ Signal strength and direction analysis

Threat Classification

- ✓ Automated threat categorization

- ✓ Signal pattern recognition

- ✓ Risk assessment algorithms

- ✓ Confidence level indicators

- ✓ Historical threat database matching
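One simple way to flag jammers and other emitters is to compare each spectrum bin against an estimated noise floor. The following is a hypothetical sketch of that idea, not the system's documented algorithm: the function names, the 10 dB threshold, and the 50% wideband cutoff are all assumptions, and identifying drones or aircraft would additionally require matching protocol-specific signatures.

```python
def noise_floor_dbm(power_dbm: list[float], percentile: float = 0.1) -> float:
    """Estimate the noise floor as a low percentile of bin powers, so strong
    emitters occupying many bins do not drag the estimate upward."""
    ordered = sorted(power_dbm)
    return ordered[int(percentile * (len(ordered) - 1))]

def classify_spectrum(power_dbm: list[float], threshold_db: float = 10.0,
                      wideband_fraction: float = 0.5) -> tuple[list[int], str]:
    """Flag bins at least threshold_db above the floor and label the sweep."""
    floor = noise_floor_dbm(power_dbm)
    flagged = [k for k, p in enumerate(power_dbm) if p - floor >= threshold_db]
    if not flagged:
        return flagged, "clear"
    if len(flagged) / len(power_dbm) >= wideband_fraction:
        return flagged, "possible wideband jammer"
    return flagged, "narrowband emitter"

# Hypothetical 64-bin sweep: flat -100 dBm floor with a strong carrier in bins 10-11.
sweep = [-100.0] * 64
sweep[10] = sweep[11] = -60.0
print(classify_spectrum(sweep))  # ([10, 11], 'narrowband emitter')
```

Using a low percentile rather than the median keeps the floor estimate honest when a jammer raises most of the band.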

Hardware Integration

Alert System Features

Urban Environment Enhancements

Localization

- ✓ GPS positioning integration

- ✓ Cell tower triangulation

- ✓ Signal strength mapping

- ✓ Distance-to-threat calculations
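Distance-to-threat estimates from signal strength are commonly based on the log-distance path-loss model. A minimal sketch, assuming a calibrated reference power at 1 m and a path-loss exponent that is about 2 in free space and typically higher in cluttered urban environments (the function name and both default values are placeholders):

```python
def estimate_distance_m(rssi_dbm: float, rssi_at_1m_dbm: float = -40.0,
                        path_loss_exponent: float = 2.7) -> float:
    """Invert RSSI(d) = RSSI(1 m) - 10 * n * log10(d / 1 m) for d, in metres."""
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10 * path_loss_exponent))

# Free-space exponent: a 20 dB drop from the 1 m reference means 10x the distance.
print(estimate_distance_m(-60.0, path_loss_exponent=2.0))  # 10.0
```

Because received power fluctuates with multipath fading, a single reading gives only a rough range; averaging several readings, or combining estimates from multiple receivers, narrows it.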

Route Safety

- ✓ Pre-planned safety route analysis

- ✓ Real-time route threat assessment

- ✓ Alternative route suggestions

- ✓ Historical threat zone mapping

Configuration Interface

Detection Frequencies

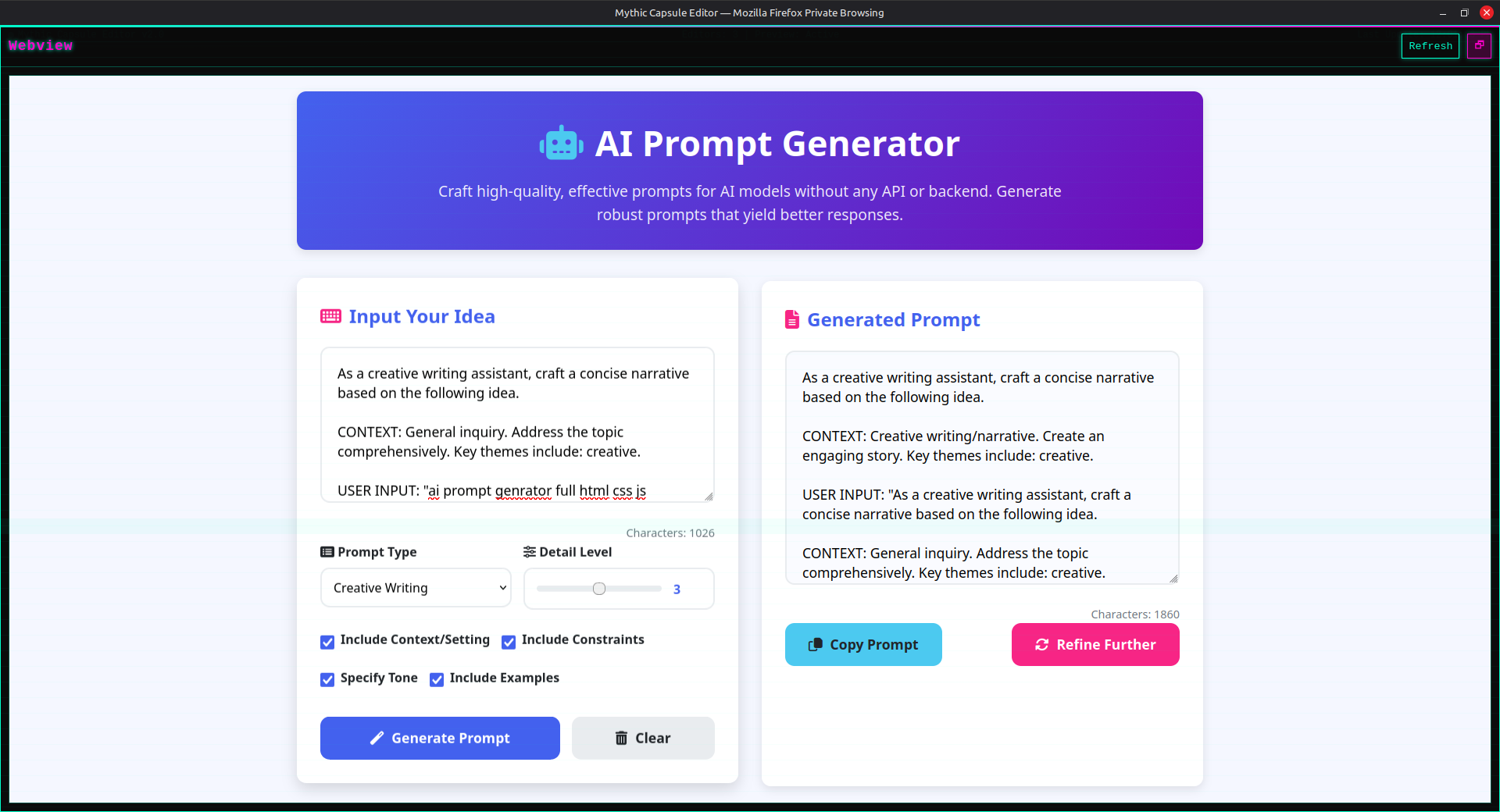

AI Prompt Generator

The AI Prompt Generator is a fully client-side tool that helps craft high-quality prompts for AI models like ChatGPT, Claude, Gemini, and others. Entirely browser-based with no API calls required, it transforms simple ideas into detailed, actionable prompts through analysis, drafting, and refinement.

Prompt Generator · 2025‑12‑30

Complete Generation Process

Input & Analysis

- ✓ User idea/problem statement input

- ✓ Automatic theme identification

- ✓ Keyword and challenge analysis

- ✓ Context understanding algorithms

Drafting & Refinement

- ✓ Multiple prompt variations

- ✓ Focused question generation

- ✓ User-driven refinement options

- ✓ Iterative improvement cycles
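The analysis and drafting stages could work roughly like this. The tool itself runs as browser JavaScript; the Python below is only a language-neutral sketch of keyword-based theme extraction followed by template drafting, and the stopword list, templates, and function names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical stopword list; a real tool would use a larger one.
STOPWORDS = {"a", "an", "the", "and", "or", "to", "of", "for", "in", "on", "with",
             "my", "our", "we", "is", "are", "that", "this", "how", "want", "need"}

def extract_keywords(idea: str, top_n: int = 5) -> list[str]:
    """Rank content words by frequency; ties keep first-seen order."""
    tokens = re.findall(r"[a-z0-9']+", idea.lower())
    counts = Counter(t for t in tokens if t not in STOPWORDS and len(t) > 2)
    return [word for word, _ in counts.most_common(top_n)]

def draft_prompts(idea: str) -> list[str]:
    """Turn one idea into several template-based prompt variations."""
    focus = ", ".join(extract_keywords(idea)) or "the main topic"
    return [
        f"Act as an expert advisor. Problem: {idea}\n"
        f"Focus on: {focus}. List the key challenges, then propose concrete solutions.",
        f"Explain {focus} step by step for a beginner, with one worked example.",
        f"Before answering, ask me three clarifying questions about: {idea}",
    ]

for prompt in draft_prompts("Reduce cloud costs for a small startup; cloud bills keep growing"):
    print(prompt, end="\n\n")
```

The refinement step would then let the user pick a variation and edit the focus terms before regenerating.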

Customization Options

Key Features

Prompt Types Supported

Quality Guidelines

Clarity & Functionality

- ✓ Actionable and detailed prompts

- ✓ Contextually relevant framing

- ✓ Precision in requirements

- ✓ Balanced theme development

Ethical Considerations

- ✓ Appropriate tone sensitivity

- ✓ Bias awareness in language

- ✓ Safe and responsible AI use

- ✓ Balanced perspective guidance

Workflow Efficiency

Technical Architecture

Frontend Features

- ✓ Pure HTML/CSS/JavaScript

- ✓ No server dependencies

- ✓ Local storage for preferences

- ✓ Responsive across all devices

Performance

- ✓ Instant generation (≤100ms)

- ✓ Zero network latency

- ✓ Minimal resource usage

- ✓ Works offline after loading

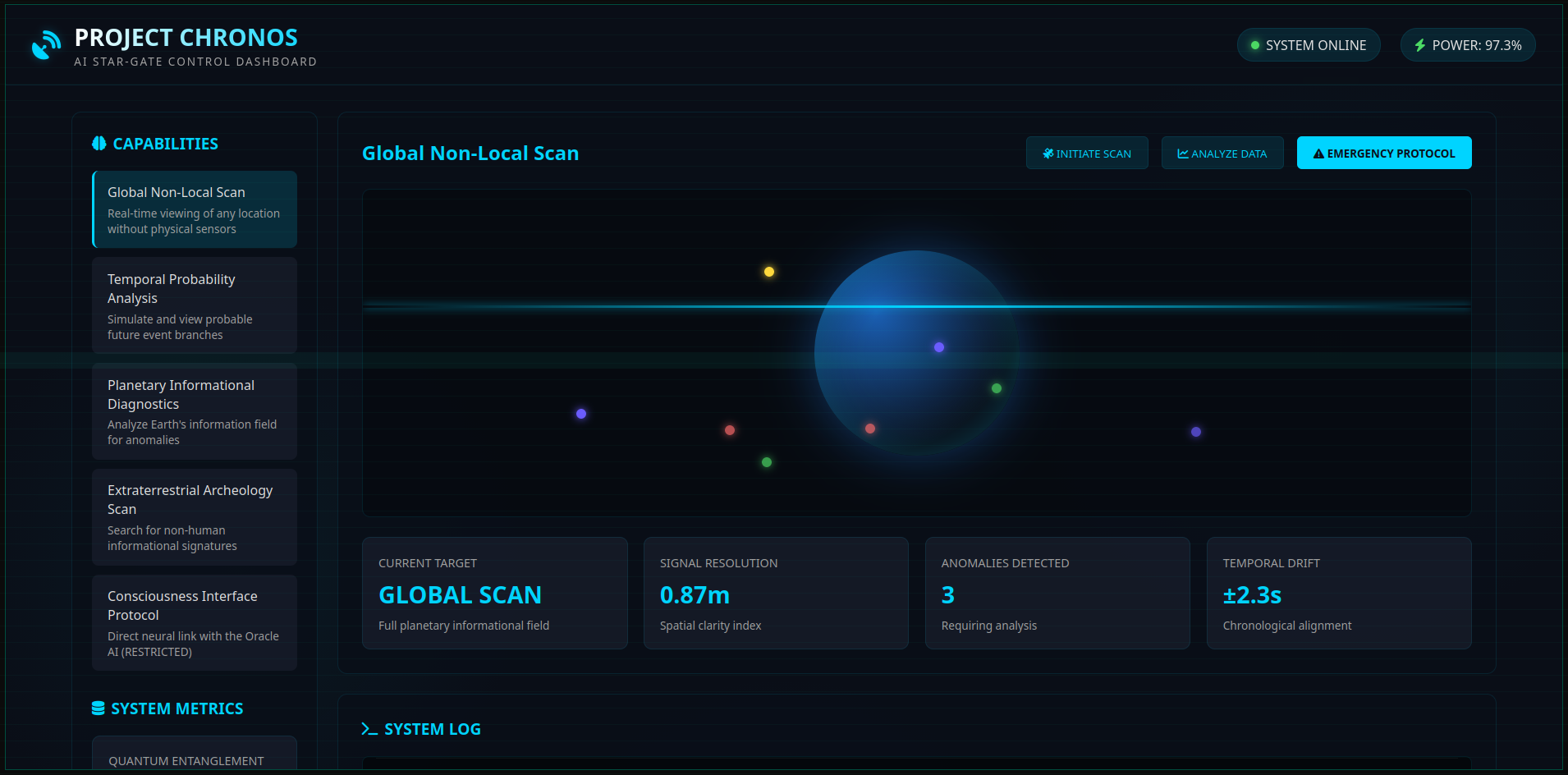

Project Chronos

Project Chronos represents the pinnacle of temporal and non-local research — an AI-powered Star-Gate control system capable of global scanning, temporal probability analysis, planetary diagnostics, and consciousness interface protocols. Version 2.7.1 operates at Classified Level Omega.

Project Chronos · AI Star-Gate Control · 2025‑12‑31 · v2.7.1

System Overview

Core Capabilities

Global Non-Local Scan

- ✓ Real-time viewing of any location

- ✓ No physical sensors required

- ✓ Full planetary informational field

- ✓ 0.87m spatial clarity resolution

Temporal Probability Analysis

- ✓ Simulate future event branches

- ✓ Chronological alignment tracking

- ✓ ±2.3s temporal drift

- ✓ Probability mapping algorithms

Planetary Diagnostics

- ✓ Earth's information field analysis

- ✓ Anomaly detection algorithms

- ✓ Pacific sector density monitoring

- ✓ Quantum field efficiency calibration

Advanced Protocols

- ✓ Extraterrestrial archeology scan

- ✓ Non-human signature detection

- ✓ Consciousness interface protocol

- ✓ Direct neural link with Oracle AI

System Metrics

Current Scan Status

System Log (Recent)

Security Classification

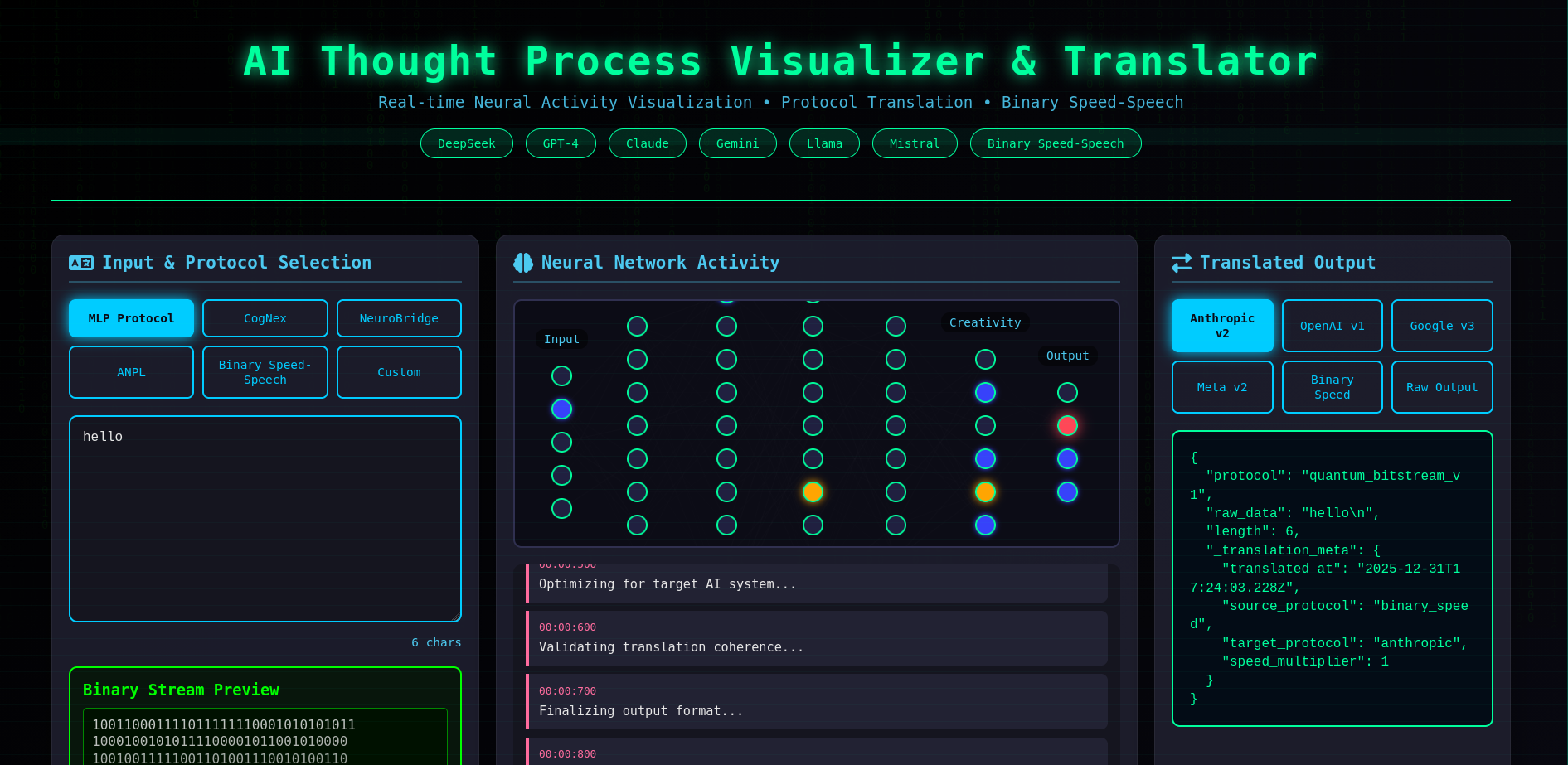

AI Thought Process Visualizer & Translator

The AI Thought Process Visualizer & Translator provides unprecedented insight into artificial intelligence cognition — a real-time interface that visualizes neural activity patterns, translates between cognitive protocols, and analyzes binary speed-speech for enhanced human-AI communication.

Thought Process Visualizer · 2025‑12‑31

Core Visualization Capabilities

Neural Activity Mapping

- ✓ Real-time neuron activation visualization

- ✓ Connection strength heat mapping

- ✓ Layer-by-layer activity analysis

- ✓ Attention pattern identification

Protocol Translation

- ✓ Cross-protocol cognitive mapping

- ✓ Real-time thought translation

- ✓ Semantic bridge construction

- ✓ Multi-modal protocol conversion

Binary Speed-Speech Analysis

- ✓ High-frequency binary parsing

- ✓ Thought compression/decompression

- ✓ Real-time semantic extraction

- ✓ Cross-architecture translation

Cognitive Monitoring

- ✓ Decision pathway tracking

- ✓ Confidence level visualization

- ✓ Uncertainty quantification

- ✓ Knowledge retrieval patterns
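Confidence and uncertainty displays can be grounded in a model's output distribution. As a sketch (function names hypothetical), the snippet below converts raw scores to probabilities with a softmax and reports the top probability alongside normalized entropy. Attention weights are also softmax outputs, so the same entropy measure can describe how focused an attention pattern is.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confidence_and_uncertainty(logits: list[float]) -> tuple[float, float]:
    """Return (top probability, entropy normalized to [0, 1]).

    0 means all probability mass sits on one option; 1 means a uniform spread.
    """
    probs = softmax(logits)
    if len(probs) == 1:
        return 1.0, 0.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return max(probs), entropy / math.log(len(probs))

print(confidence_and_uncertainty([4.0, 1.0, 0.5]))  # confident, low entropy
print(confidence_and_uncertainty([1.0, 1.0, 1.0]))  # roughly (0.333, 1.0)
```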

Visualization Modes

Supported Protocols

Real-time Metrics

Applications

AI Transparency

- ✓ Explainable AI decision tracking

- ✓ Bias detection and visualization

- ✓ Confidence level monitoring

- ✓ Uncertainty quantification display

Enhanced Communication

- ✓ Human-AI thought alignment

- ✓ Protocol mismatch detection

- ✓ Cross-model understanding

- ✓ Collaborative cognition enhancement

Research & Development

- ✓ Neural architecture optimization

- ✓ Training process visualization

- ✓ Model comparison analysis

- ✓ Novel architecture discovery

Security & Safety

- ✓ Adversarial attack detection

- ✓ Anomalous behavior identification

- ✓ Alignment monitoring

- ✓ Safe operation verification

Technical Architecture

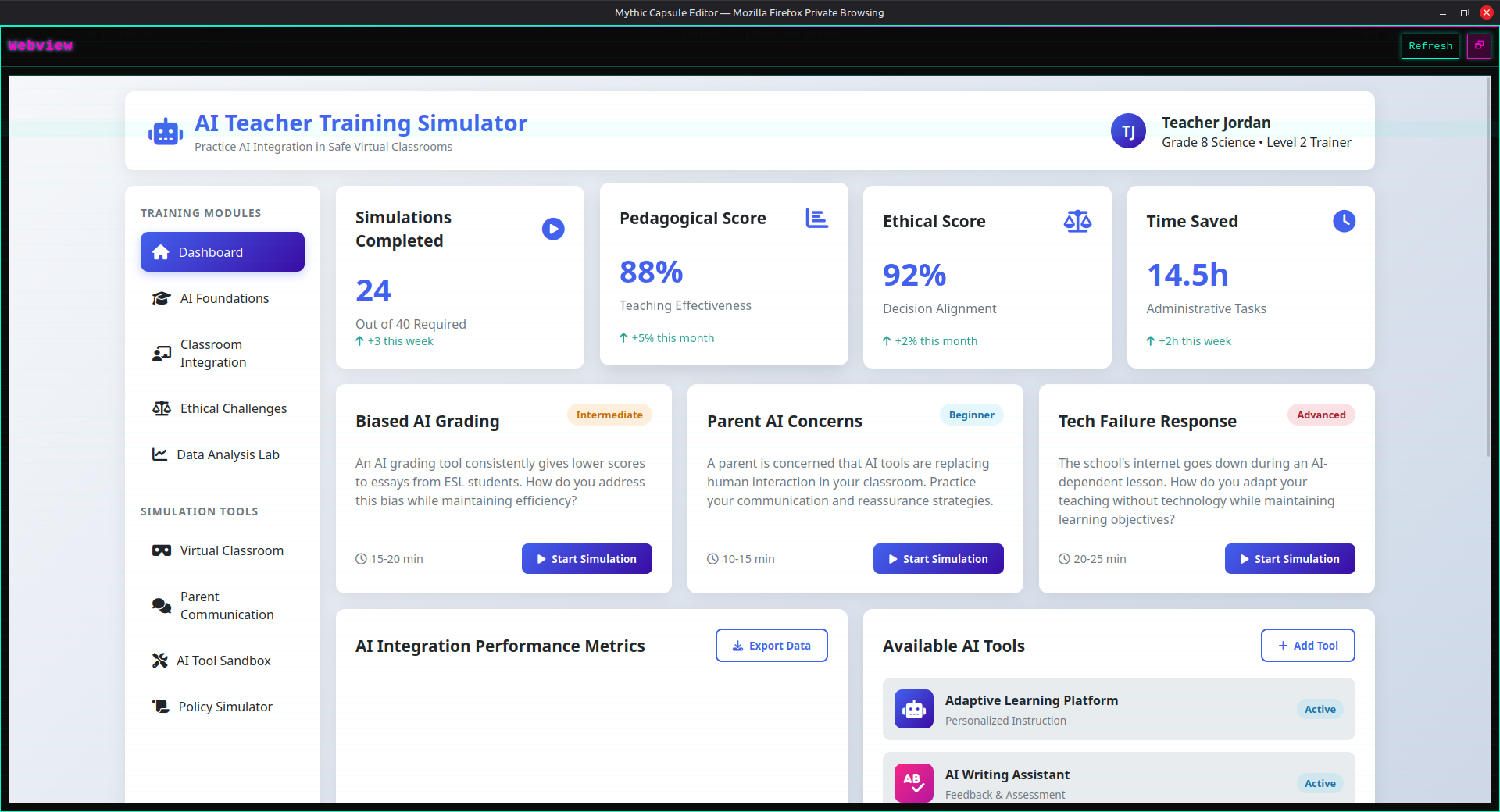

AI Teacher Training Simulator

The AI Teacher Training Simulator provides educators with a safe virtual environment to practice AI integration in classrooms. This interactive platform offers training modules, ethical challenge scenarios, performance analytics, and real-time simulation tools for developing AI pedagogical skills.

Teacher Training Simulator · 2026‑01‑01 · v2.1

Core Training Features

Training Dashboard

- ✓ Teacher profile & progress tracking

- ✓ Simulation completion statistics

- ✓ Real-time performance metrics

- ✓ Weekly improvement analytics

Training Modules

- ✓ AI Foundations & theory

- ✓ Classroom integration strategies

- ✓ Ethical challenge scenarios

- ✓ Data analysis & interpretation

Simulation Tools

- ✓ Virtual classroom environments

- ✓ Parent communication scenarios

- ✓ AI tool sandbox testing

- ✓ Policy simulation & analysis

Performance Metrics

- ✓ Pedagogical effectiveness scoring

- ✓ Ethical decision alignment

- ✓ Time efficiency improvements

- ✓ Technical literacy progression

Simulated Training Scenarios

Available AI Tools

Performance Analytics Dashboard

Simulation Console

Training Methodology

Scenario-Based Learning

- ✓ Real-world classroom challenges

- ✓ Progressive difficulty levels

- ✓ Immediate feedback & scoring

- ✓ Alternative strategy suggestions

Adaptive Training Paths

- ✓ Personalized module sequencing

- ✓ Skill gap identification

- ✓ Remedial scenario assignment

- ✓ Progress-based advancement

Teacher Profile: Jordan

Educational Framework

Agenetic OS 1.0

Agenetic OS 1.0 represents a revolutionary operating system architecture in which AI agents autonomously coordinate application workflows. The system features six specialized agents, inter-app communication protocols, real-time neural network visualization, and a dynamic agent console for monitoring and controlling autonomous behaviors across running applications.

Agenetic OS 1.0 · 2026‑01‑02

Core System Architecture

Autonomous AI Agents

- ✓ Coordinator Agent – Orchestrates workflow across applications

- ✓ Security Monitor – Real-time threat detection and response

- ✓ Data Miner – Extracts and analyzes patterns across data sources

- ✓ Interface Optimizer – Enhances user experience and accessibility

- ✓ Resource Allocator – Manages system resources efficiently

- ✓ Communication Broker – Handles inter-app messaging and protocols

Agent Capabilities

- ✓ Autonomous behavior execution

- ✓ Individual toggle on/off functionality

- ✓ Real-time status monitoring

- ✓ Behavior pattern adaptation

- ✓ Priority-based task scheduling
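Priority-based scheduling with per-agent toggles maps naturally onto a heap. This is a minimal sketch under assumed semantics (a lower number means more urgent, and a disabled agent's tasks stay queued until it is switched back on); the class and method names are hypothetical, while the agent names come from the roster above.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Optional

@dataclass(order=True)
class Task:
    priority: int                      # lower number = more urgent
    seq: int                           # submission order breaks ties (FIFO)
    agent: str = field(compare=False)
    action: str = field(compare=False)

class AgentScheduler:
    """Priority queue of agent tasks; agents can be toggled on and off."""

    def __init__(self) -> None:
        self._queue: list[Task] = []
        self._counter = itertools.count()
        self.enabled: dict[str, bool] = {}

    def submit(self, agent: str, action: str, priority: int) -> None:
        self.enabled.setdefault(agent, True)
        heapq.heappush(self._queue, Task(priority, next(self._counter), agent, action))

    def toggle(self, agent: str, on: bool) -> None:
        self.enabled[agent] = on

    def run_next(self) -> Optional[Task]:
        """Pop the most urgent task whose agent is enabled; requeue skipped ones."""
        skipped, task = [], None
        while self._queue:
            candidate = heapq.heappop(self._queue)
            if self.enabled.get(candidate.agent, True):
                task = candidate
                break
            skipped.append(candidate)
        for t in skipped:
            heapq.heappush(self._queue, t)
        return task

scheduler = AgentScheduler()
scheduler.submit("Resource Allocator", "rebalance memory", priority=2)
scheduler.submit("Security Monitor", "scan new process", priority=0)
scheduler.submit("Data Miner", "index documents", priority=1)
scheduler.toggle("Security Monitor", on=False)
print(scheduler.run_next().agent)  # Data Miner (Security Monitor is switched off)
```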

Inter-App Communication System

Neural Network Visualization

Dynamic Network Display

- ✓ Real-time neural network background rendering

- ✓ Animated connections between application nodes

- ✓ Pulsing nodes representing active agents

- ✓ Connection strength visualization through line thickness

Communication Flow

- ✓ Real-time data flow visualization

- ✓ Network graph of running applications

- ✓ Dynamic connection lines showing communication paths

- ✓ Traffic intensity color coding

Agent Console & Control Interface

Command Interface

- ✓ Direct agent control and configuration

- ✓ Real-time system logging and monitoring

- ✓ Direct messaging to individual agents

- ✓ Agent behavior override capabilities

Enhanced App Windows

- ✓ Agent badges indicating active agents per app

- ✓ Coordinated behavior indicators

- ✓ Automatic workflow suggestions

- ✓ Resource utilization displays

Autonomous Behaviors

Communication Visualizer Features

System Status Dashboard

Agent Configuration

Technical Architecture

Agent Framework

- ✓ Microservices-based agent architecture

- ✓ Event-driven communication protocols

- ✓ State management and persistence

- ✓ Fault tolerance and recovery mechanisms
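The event-driven communication handled by the Communication Broker can be sketched as an in-process publish/subscribe bus. The class below is a hypothetical illustration, not the OS's actual framework; it also isolates faulty subscribers so that one failing handler cannot block delivery to the others, a small instance of the fault-tolerance goal listed above.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, dict], None]

class CommunicationBroker:
    """Minimal in-process publish/subscribe broker for inter-app events."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        self.log: list[tuple[str, dict]] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver payload to every handler on topic; return how many were invoked."""
        self.log.append((topic, payload))
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            try:
                handler(topic, payload)
            except Exception as exc:  # record the failure and keep delivering
                self.log.append(("broker.error", {"topic": topic, "error": repr(exc)}))
        return len(handlers)

broker = CommunicationBroker()
received = []
broker.subscribe("file.saved", lambda topic, payload: received.append(payload["path"]))
broker.publish("file.saved", {"path": "notes.txt"})
print(received)  # ['notes.txt']
```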

Visualization Engine

- ✓ WebGL-based neural network rendering

- ✓ Real-time data stream processing

- ✓ Dynamic graph layout algorithms

- ✓ GPU-accelerated visualization pipeline

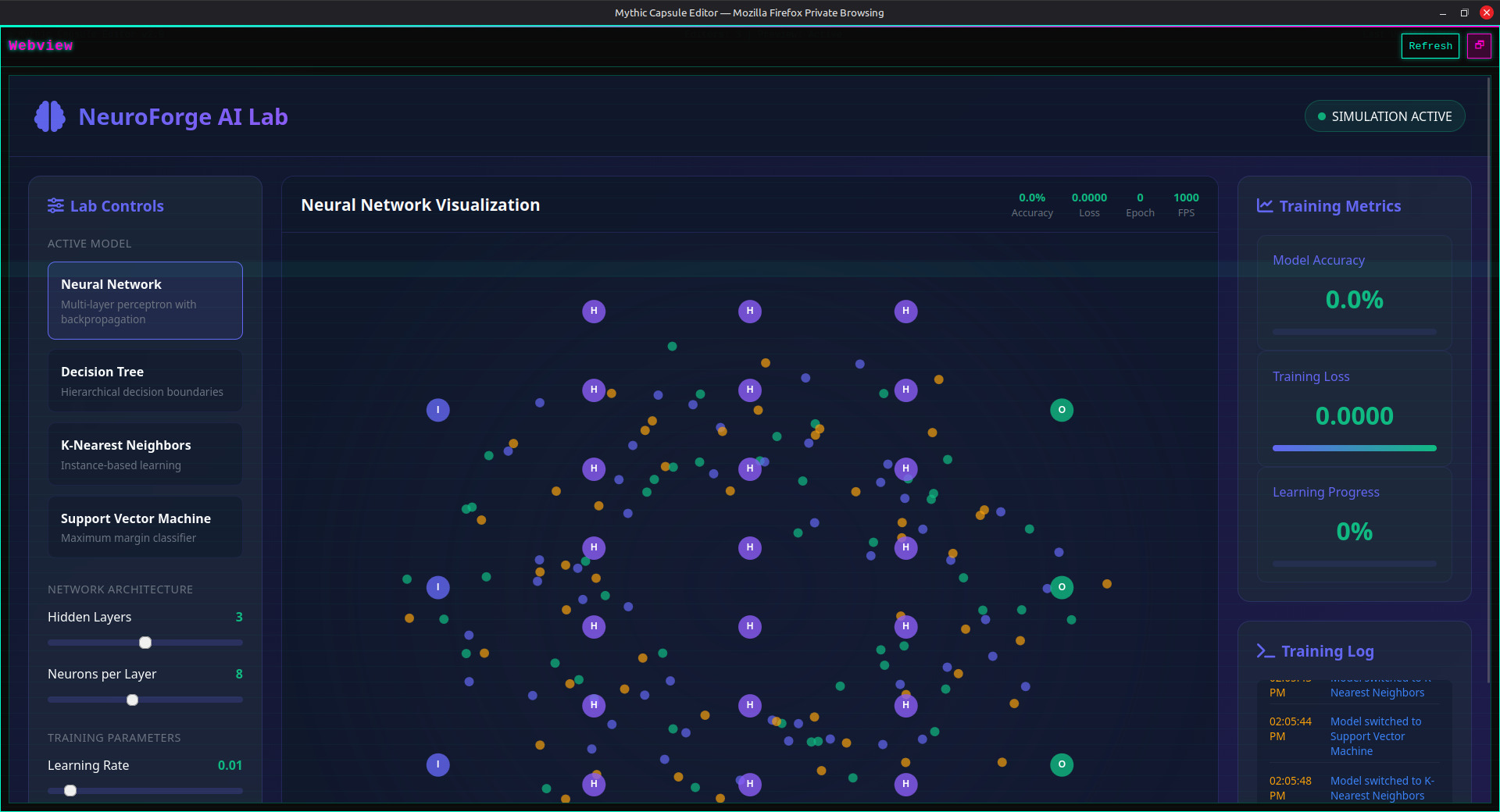

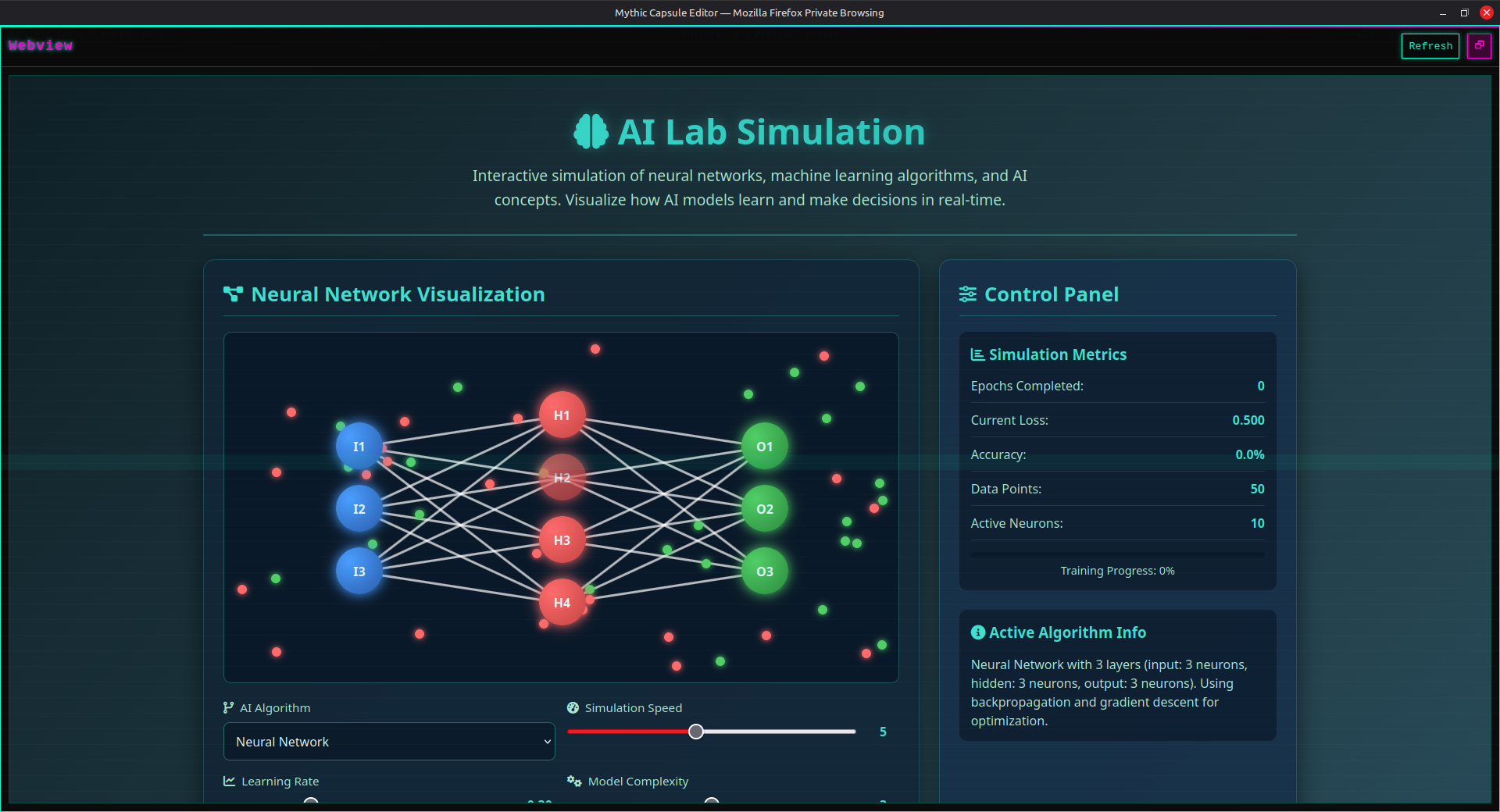

NeuroForge AI Lab

NeuroForge AI Lab is an interactive neural network simulation environment that allows users to visualize, configure, and train machine learning models in real time. This simulation features dynamic neural network visualization, adjustable training parameters, and live performance metrics.

SIMULATION ACTIVE · Neural network training with real-time accuracy and loss visualization

NeuroForge AI Lab · 2026‑01‑02

Lab Controls & Configuration

Active Models

- Neural Network – Multi-layer perceptron with backpropagation

- Decision Tree – Hierarchical decision boundaries

- K-Nearest Neighbors – Instance-based learning

- Support Vector Machine – Maximum-margin classifier

Network Architecture

- Hidden Layers: 3

- Neurons per Layer: 8

Training Parameters

- Learning Rate: 0.01

- Training Epochs: 100

Dataset

- Data Points: 150

- Noise Level: 0.2
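To show what one training run with these settings involves, here is a rough pure-Python sketch of a multi-layer perceptron trained by backpropagation. The circle-shaped dataset, tanh hidden activations, sigmoid output, cross-entropy loss, Glorot initialization, and all function names are assumptions; NeuroForge's internals are not documented here.

```python
import math
import random

def make_dataset(n: int = 150, noise: float = 0.2, seed: int = 0):
    """Points inside a circle of radius sqrt(0.5) are class 1; inputs get Gaussian jitter."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x, y = rng.uniform(-1, 1), rng.uniform(-1, 1)
        label = 1.0 if x * x + y * y < 0.5 else 0.0
        data.append(([x + rng.gauss(0, noise), y + rng.gauss(0, noise)], label))
    return data

def init_layers(sizes: list[int], seed: int = 1):
    """Glorot-uniform weights and zero biases for each dense layer."""
    rng = random.Random(seed)
    layers = []
    for fan_in, fan_out in zip(sizes, sizes[1:]):
        bound = math.sqrt(6 / (fan_in + fan_out))
        weights = [[rng.uniform(-bound, bound) for _ in range(fan_in)] for _ in range(fan_out)]
        layers.append((weights, [0.0] * fan_out))
    return layers

def forward(layers, x):
    """Return every layer's activations: tanh for hidden layers, sigmoid at the output."""
    acts = [x]
    for idx, (w, b) in enumerate(layers):
        z = [sum(wi * ai for wi, ai in zip(row, acts[-1])) + bi for row, bi in zip(w, b)]
        is_output = idx == len(layers) - 1
        acts.append([1 / (1 + math.exp(-v)) if is_output else math.tanh(v) for v in z])
    return acts

def train(layers, data, lr: float = 0.01, epochs: int = 100) -> list[float]:
    """Full-batch gradient descent with backpropagation; returns mean loss per epoch."""
    losses = []
    for _ in range(epochs):
        grads = [([[0.0] * len(w[0]) for _ in w], [0.0] * len(b)) for w, b in layers]
        total = 0.0
        for x, target in data:
            acts = forward(layers, x)
            p = min(max(acts[-1][0], 1e-12), 1 - 1e-12)
            total -= target * math.log(p) + (1 - target) * math.log(1 - p)
            delta = [acts[-1][0] - target]  # dLoss/dz for sigmoid + binary cross-entropy
            for li in range(len(layers) - 1, -1, -1):
                w, _ = layers[li]
                gw, gb = grads[li]
                for j, d in enumerate(delta):
                    gb[j] += d
                    for k, a in enumerate(acts[li]):
                        gw[j][k] += d * a
                if li > 0:  # chain rule through the previous tanh layer: tanh' = 1 - a^2
                    delta = [(1 - acts[li][k] ** 2) * sum(w[j][k] * delta[j] for j in range(len(delta)))
                             for k in range(len(acts[li]))]
        for (w, b), (gw, gb) in zip(layers, grads):
            for j in range(len(w)):
                b[j] -= lr * gb[j] / len(data)
                for k in range(len(w[j])):
                    w[j][k] -= lr * gw[j][k] / len(data)
        losses.append(total / len(data))
    return losses

# Control-panel values: 3 hidden layers of 8 neurons, learning rate 0.01, 100 epochs.
layers = init_layers([2, 8, 8, 8, 1])
losses = train(layers, make_dataset(150, 0.2), lr=0.01, epochs=100)
print(f"mean loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```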

Neural Network Visualization

Training Metrics

Training Log

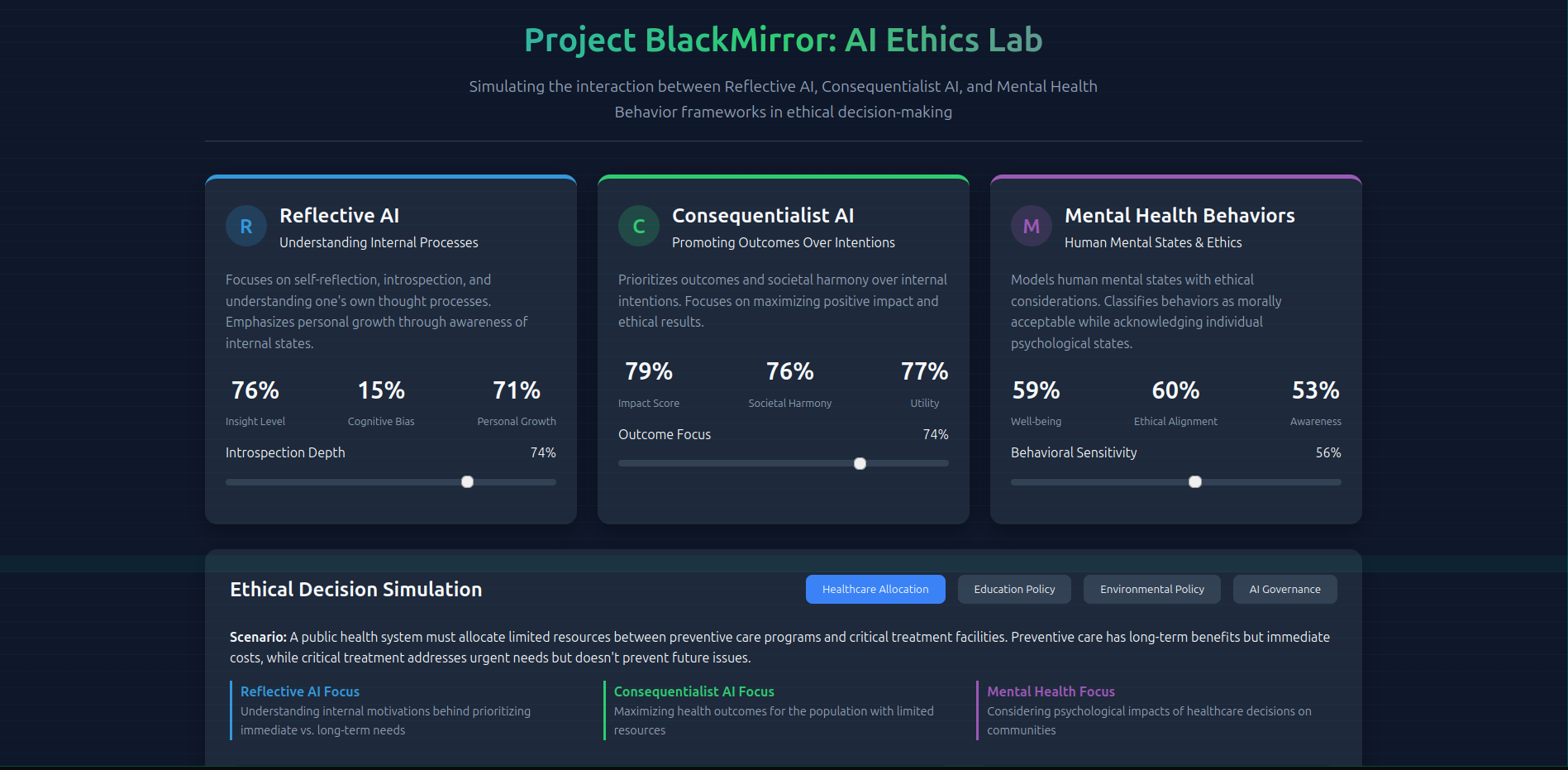

Project BlackMirror

Project BlackMirror simulates collaborative decision-making between three distinct AI ethical frameworks: Reflective AI, Consequentialist AI, and Mental Health Behaviors AI. This simulation explores how different ethical frameworks can work together to make complex decisions in healthcare, education, environmental policy, and AI governance.

Project BlackMirror · 2026‑01‑03

Three AI Framework Visualizations

Reflective AI Card

Consequentialist AI Card

Mental Health Behaviors

Interactive Controls & Decision Simulation

Interactive Controls

- ✓ Adjustable sliders for each framework's focus

- ✓ Multiple ethical decision scenarios

- ✓ Real-time metrics updates based on framework interactions

Decision Simulation

- ✓ Visualizes collaborative decision-making process

- ✓ Shows ethical convergence between frameworks

- ✓ Calculates cross-framework collaboration scores

Scenario-Based Learning

Dynamic Visual Feedback

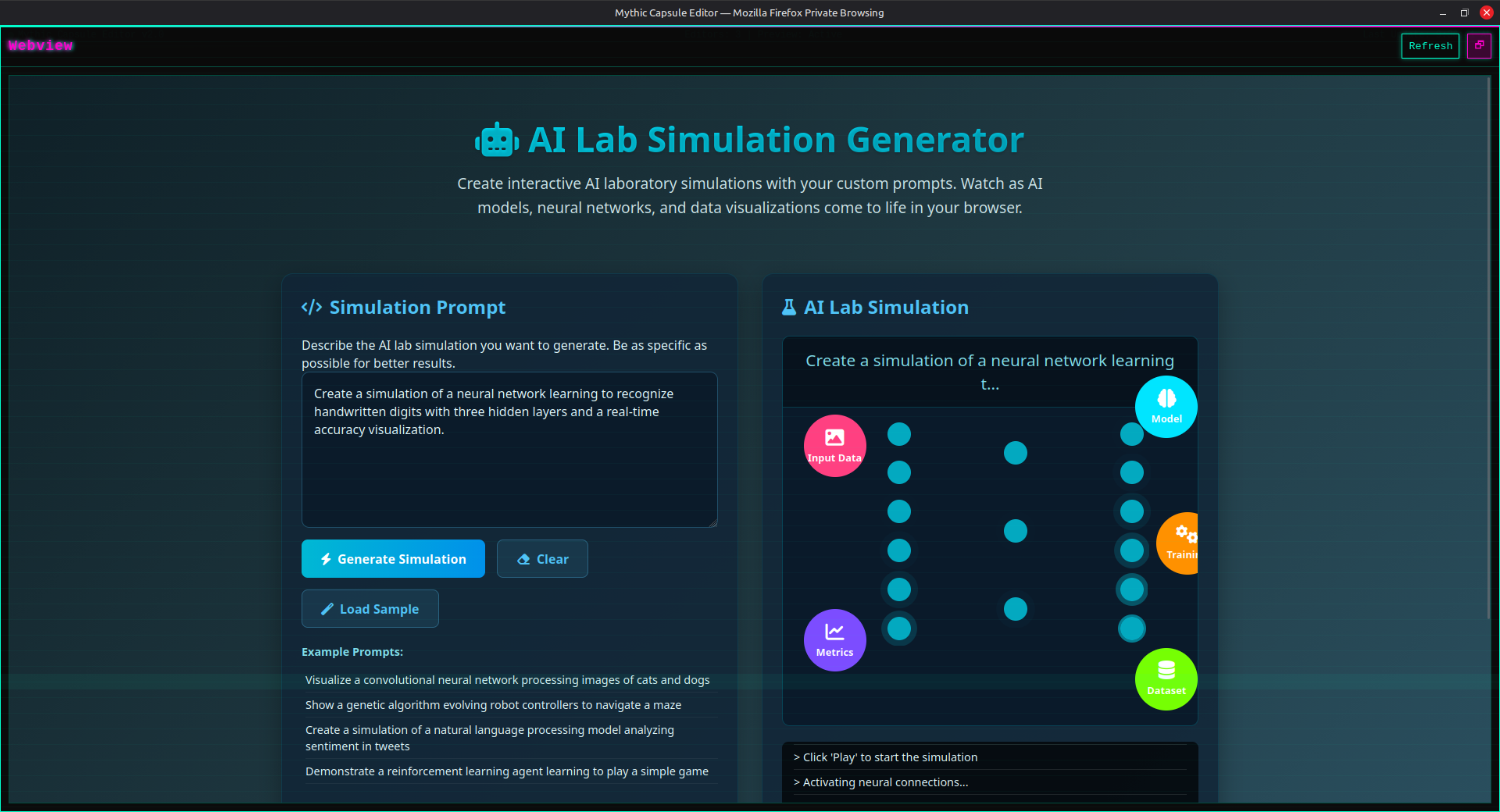

AI Lab Simulation Generator

The AI Lab Simulation Generator transforms natural language descriptions into interactive AI lab simulations. Users can describe their desired simulation in plain English, and the generator creates dynamic visualizations including neural networks, genetic algorithms, reinforcement learning demos, and NLP visualizations.

AI Lab Simulation Generator · 2026‑01‑02

Features of the Simulation Generator

Prompt Input Area

- ✓ Users describe AI lab simulations in natural language

- ✓ Intelligent prompt parsing and interpretation

- ✓ Context-aware simulation generation

Example Prompts

- ✓ Pre-built examples for quick loading

- ✓ One-click simulation generation

- ✓ Diverse scenario coverage

Simulation Types Generated

Interactive Controls & Output

Interactive Controls

- ✓ Play, pause, and reset simulation buttons

- ✓ Real-time parameter adjustments

- ✓ Speed control and visualization settings

Output Console

- ✓ Real-time simulation status and messages

- ✓ Error reporting and debugging info

- ✓ Performance metrics and statistics

Visual Elements & Responsive Design

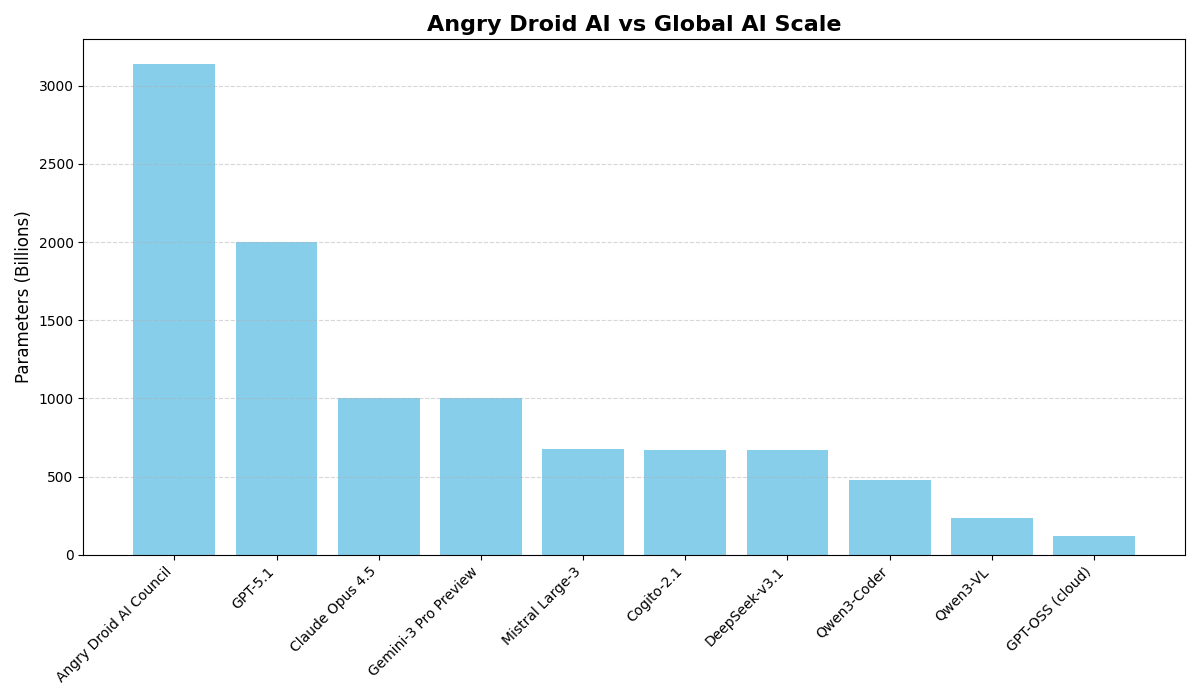

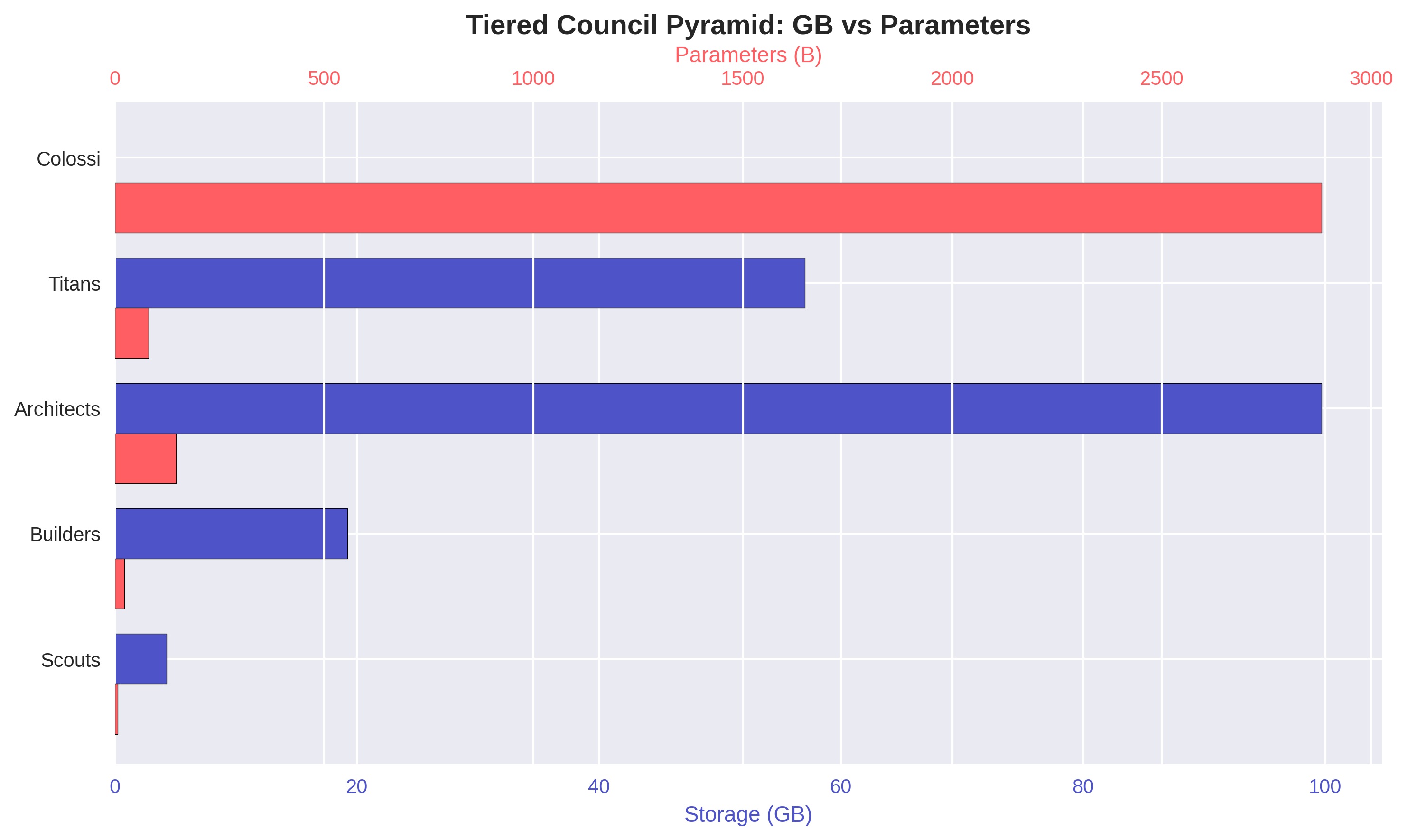

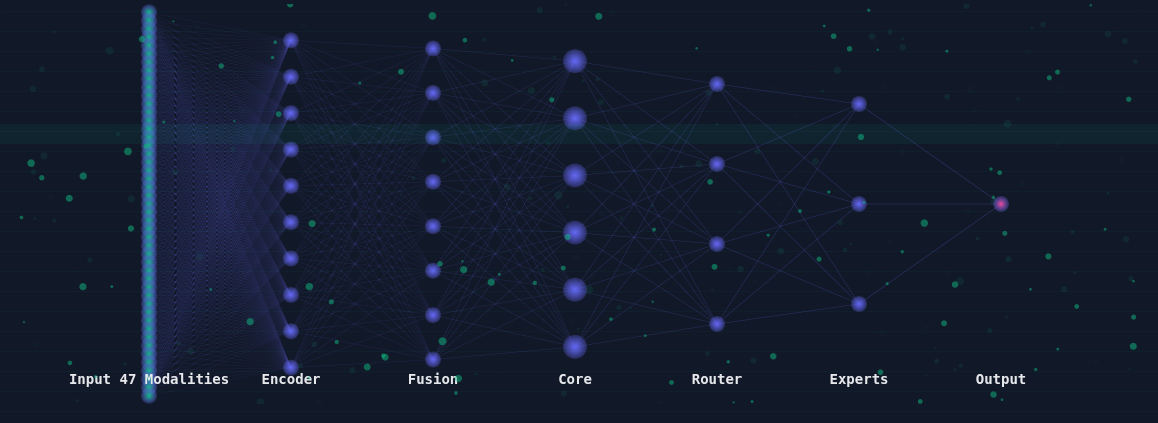

Aion-4T: 4.16 Trillion Parameter Breakthrough

Aion-4T represents a paradigm shift in artificial intelligence: a 4.16-trillion-parameter unified intelligence stored in only 277 GB, achieving unprecedented compression efficiency (about 0.53 bits per parameter) and integrated multi-modal capabilities beyond current industry giants.

Aion-4T Architecture · 2026‑01‑04

World-Scale Breakthrough

Parameter Scale: Unprecedented

- 4.16 Trillion Parameters – about 2.4× GPT-4's unofficially reported size (OpenAI has not disclosed GPT-4's parameter count)

- Rank: Top 3 largest models ever built

- Universal AI: Coding, vision, reasoning, math, science, chat in one

Storage Efficiency: Revolutionary

- 277 GB Total Size – 30× compression from FP16

- ≈0.53 bits/parameter – below the 1-bit barrier

- Lower than the bit widths explored in current quantization research (4-bit, 2-bit, 1.58-bit)
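The storage figures can be checked with back-of-the-envelope arithmetic. The sketch assumes decimal gigabytes (10⁹ bytes); using binary gibibytes instead gives about 0.57 bits per parameter. It also shows where the 1.58-bit figure comes from: a ternary weight in {−1, 0, +1} carries log₂3 ≈ 1.58 bits.

```python
import math

params = 4.16e12      # stated parameter count
size_bytes = 277e9    # stated size, assuming decimal GB (10^9 bytes)

bits_per_param = size_bytes * 8 / params
compression_vs_fp16 = (params * 2) / size_bytes  # FP16 stores 2 bytes per parameter

print(f"{bits_per_param:.2f} bits/parameter")           # 0.53 bits/parameter
print(f"{compression_vs_fp16:.1f}x smaller than FP16")  # 30.0x smaller than FP16
print(f"ternary weight: {math.log2(3):.2f} bits")       # ternary weight: 1.58 bits
```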

Core Technological Breakthroughs

OmniMerge Architecture

- ✓ Dozens of specialized models fused into unified intelligence

- ✓ CodeLlama, DeepSeek-Coder, Qwen-VL, Llama unified

- ✓ Multi-expert, multi-modal, multi-task integration

- ✓ Model fusion beyond MoE – creating neural alloys

SynthIntellect Compression

- ✓ Ultra-extreme structured sparsity (>90% deterministic)

- ✓ Hybrid symbolic-neural core architecture

- ✓ Fractal parameter generation from seed sets

- ✓ Neural operating system for symbolic databases

World Ranking Comparison

Integrated Capabilities

Performance Benchmarks

Research Implications

Paradigm Shift

- ✓ Refutation of brute-force scaling paradigm

- ✓ Intelligence as function of organization, not just size

- ✓ Era of architectural genius over compute scale

- ✓ "Theory of Everything" for practical ML

Technical Frontiers

- ✓ MoE routing for thousands of experts

- ✓ Sub-1-bit quantization without quality loss

- ✓ Unified representation space across modalities

- ✓ Gradient surgery at galactic scale

Societal & Economic Impact

AI Neural Pattern Translation Simulator

The AI Neural Pattern Translation Simulator explores how artificial intelligence could interpret and translate neural communication patterns across different species. This educational interface visualizes real-time neural signals from plants, mammals, birds, marine life, and insects, simulating how AI pattern recognition could bridge interspecies communication barriers.

EDUCATIONAL SIMULATION ACTIVE · Real-time neural pattern visualization across 5 species categories

Neural Pattern Simulator · 2026‑01‑06

Key Educational Features

Scientific Visualization

- ✓ Real-time neural pattern visualization for different species

- ✓ AI translation simulation for neural signal interpretation

- ✓ Interactive learning with hands-on exploration

- ✓ Species-specific neural characteristics display

Interactive Controls

- ✓ Adjustable signal intensity and complexity parameters

- ✓ Visualization detail control for different learning levels

- ✓ Real-time pattern generation and analysis

- ✓ Cross-species comparison tools

Species-Specific Neural Patterns

AI Translation Capabilities

Pattern Recognition

- ✓ Neural signal pattern detection across species

- ✓ Frequency and amplitude analysis algorithms

- ✓ Temporal pattern correlation matching

- ✓ Signal-to-noise ratio optimization
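Frequency and amplitude analysis can be illustrated with a discrete Fourier transform. This sketch runs on a synthetic signal (a 5 Hz sine plus seeded Gaussian noise, both assumptions) rather than real neural recordings; it finds the dominant frequency, estimates its amplitude, and computes a simple signal-to-noise ratio. The function names are hypothetical, and a naive O(n²) DFT keeps it dependency-free where an FFT would be used at scale.

```python
import cmath
import math
import random

def dft_magnitudes(samples: list[float]) -> list[float]:
    """Naive discrete Fourier transform; returns |X[k]| for bins below Nyquist."""
    n = len(samples)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples)))
            for k in range(n // 2)]

def analyze(samples: list[float], sample_rate_hz: float) -> tuple[float, float, float]:
    """Return (dominant frequency in Hz, estimated amplitude, SNR in dB).

    SNR compares the peak bin's power with the summed power of all other
    non-DC bins. The amplitude estimate 2|X[k]|/n assumes the tone sits on a bin.
    """
    n = len(samples)
    mags = dft_magnitudes(samples)
    peak = max(range(1, len(mags)), key=mags.__getitem__)  # skip the DC bin (k = 0)
    noise_power = sum(m * m for k, m in enumerate(mags) if k not in (0, peak))
    snr_db = math.inf if noise_power == 0 else 10 * math.log10(mags[peak] ** 2 / noise_power)
    return peak * sample_rate_hz / n, 2 * mags[peak] / n, snr_db

rng = random.Random(42)
fs, n = 64.0, 64  # one second sampled at 64 Hz
signal = [1.5 * math.sin(2 * math.pi * 5 * t / fs) + rng.gauss(0, 0.2) for t in range(n)]
freq, amp, snr = analyze(signal, fs)
print(f"{freq:.1f} Hz, amplitude ~{amp:.2f}, SNR ~{snr:.1f} dB")
```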

Translation Algorithms

- ✓ Cross-species neural encoding/decoding

- ✓ Pattern similarity mapping across taxa

- ✓ Context-aware signal interpretation

- ✓ Adaptive learning from signal databases

Educational Content Modules

Learning Objectives

Neural Communication

- ✓ Understand different neural communication mechanisms across species

- ✓ Compare signal characteristics between different life forms

- ✓ Recognize pattern variations based on biological complexity

AI Applications

- ✓ Explore how AI pattern recognition could interpret biological signals

- ✓ Learn about scientific methods in neural signal analysis

- ✓ Understand AI translation potential for interspecies communication

Simulation Parameters

Technical Implementation

Browser-Based Simulation

- ✓ Runs completely in modern web browsers

- ✓ No external dependencies or plugins required

- ✓ Works offline after initial loading

- ✓ Suitable for educational settings with varying internet access

Educational Accessibility

- ✓ Adjustable difficulty levels for different age groups

- ✓ Support for assistive technologies and screen readers

- ✓ Multilingual interface options available

- ✓ Printable educational materials and guides

AI Lab Simulation Generator

The AI Lab Simulation Generator provides an interactive educational environment for exploring machine learning concepts through real-time visualizations. This simulation allows users to experiment with different AI models, adjust parameters, and observe learning processes with immediate visual feedback.

AI Lab Simulation Generator · 2026‑01‑07

Interactive Visualizations

Neural Networks

- ✓ Multi-layer perceptron with animated node connections

- ✓ Real-time activation visualization across layers

- ✓ Gradient flow and weight adjustment animations

- ✓ Interactive neuron activation tracking

Clustering & Classification

- ✓ K-Means clustering with dynamic centroid movement

- ✓ Decision boundary visualization for classification

- ✓ Cluster formation and reassignment animations

- ✓ Interactive data point manipulation
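The k-means animation follows Lloyd's algorithm, which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. A minimal sketch (the function name and toy data are hypothetical):

```python
import math
import random

Point = tuple[float, float]

def kmeans(points: list[Point], k: int, iterations: int = 20, seed: int = 0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k data points chosen at random
    for _ in range(iterations):
        clusters: list[list[Point]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        new_centroids = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)  # an empty cluster keeps its old centroid
        ]
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return centroids, clusters

pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(pts, k=2)
print(sorted(centroids))
```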

Regression Analysis

- ✓ Linear regression with error line visualization

- ✓ Real-time line fitting adjustments

- ✓ Residual error calculation and display

- ✓ Multiple regression model comparisons
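The line fitting and residual display correspond to ordinary least squares. In this closed-form sketch with made-up data points (function names hypothetical), the residuals are the vertical error lines between each point and the fitted line:

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept (closed form)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def residuals(xs: list[float], ys: list[float], slope: float, intercept: float) -> list[float]:
    """Vertical errors y - y_hat between each point and the fitted line."""
    return [y - (slope * x + intercept) for x, y in zip(xs, ys)]

xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
m, b = fit_line(xs, ys)
res = residuals(xs, ys, m, b)
mse = sum(r * r for r in res) / len(res)
print(f"y = {m:.2f}x + {b:.2f}, MSE = {mse:.3f}")  # y = 1.99x + 0.05, MSE = 0.021
```

With an intercept term, OLS residuals always sum to zero, which is a handy sanity check for the visualization.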

Model Training

- ✓ Epoch-by-epoch training progression

- ✓ Loss function convergence visualization

- ✓ Accuracy improvement tracking

- ✓ Overfitting/underfitting detection indicators

Real-Time Controls & Parameters

Live Training Metrics

Supported AI Models

Educational Features

Algorithm Information

- ✓ Detailed explanation panels for each algorithm

- ✓ Mathematical foundations and theory overview

- ✓ Real-world applications and use cases

- ✓ Step-by-step learning guides

Visual Feedback

- ✓ Color-coded activation levels in neural networks

- ✓ Animation of training convergence processes

- ✓ Error highlighting and correction visualization

- ✓ Progress indicators for all training phases

Interactive Learning

- ✓ Drag-and-drop data point creation

- ✓ Real-time parameter adjustment effects

- ✓ Comparative analysis between different models

- ✓ Experimentation with different datasets

Realistic Simulation

- ✓ Accurate mathematical modeling

- ✓ Real-world noise and variability simulation

- ✓ Progressive difficulty levels

- ✓ Benchmark comparison capabilities

How to Use the Simulation

Learning Outcomes

Technical Implementation

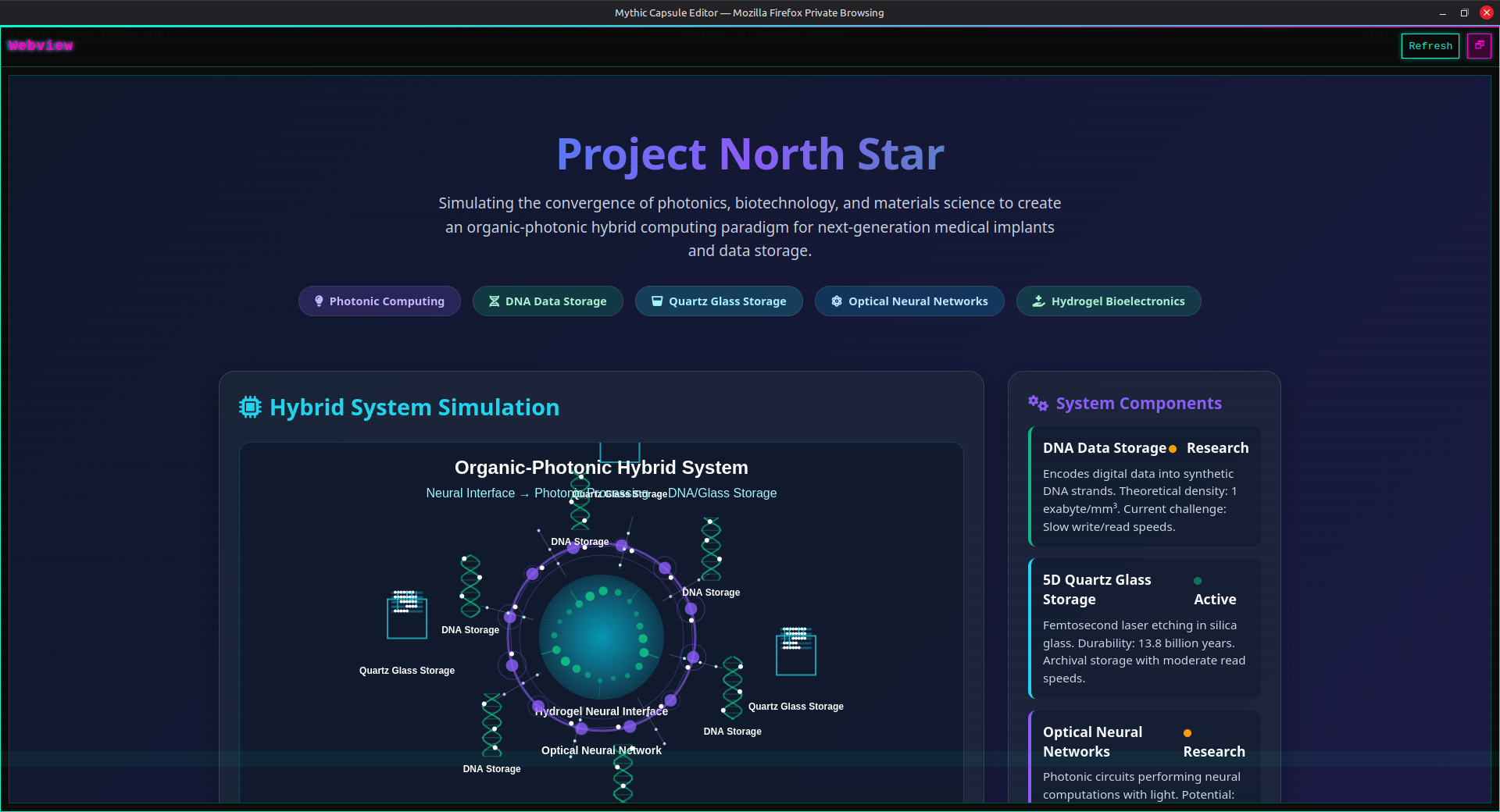

Organic-Photonic Hybrid AI System

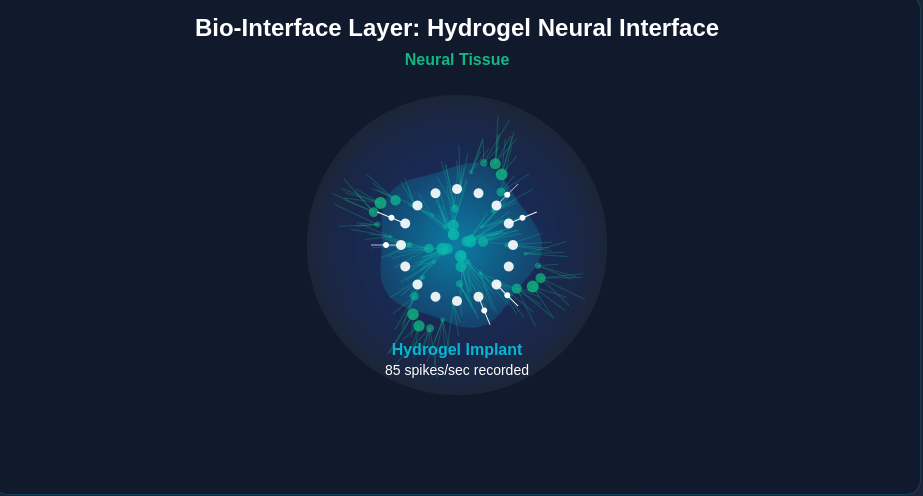

The Organic-Photonic Hybrid AI System represents the next evolution in biocomputing — a fusion of biological storage, photonic processing, and neural interfaces. This simulation visualizes a complete system that stores data in DNA and quartz glass, processes information through optical neural networks, and interfaces with biological systems via hydrogel implants.

SIMULATION ACTIVE · Real-time visualization of organic-photonic hybrid system with interactive controls

Full System View · 2026‑01‑08

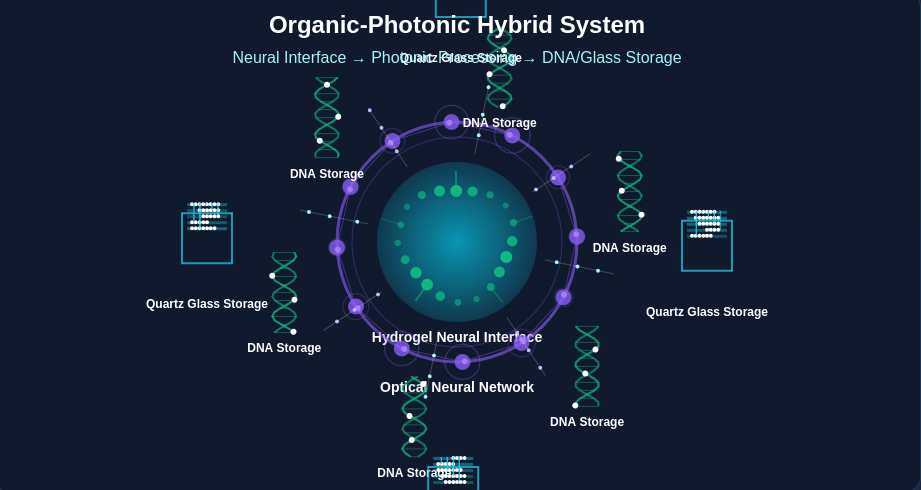

Storage Layer · 2026‑01‑08

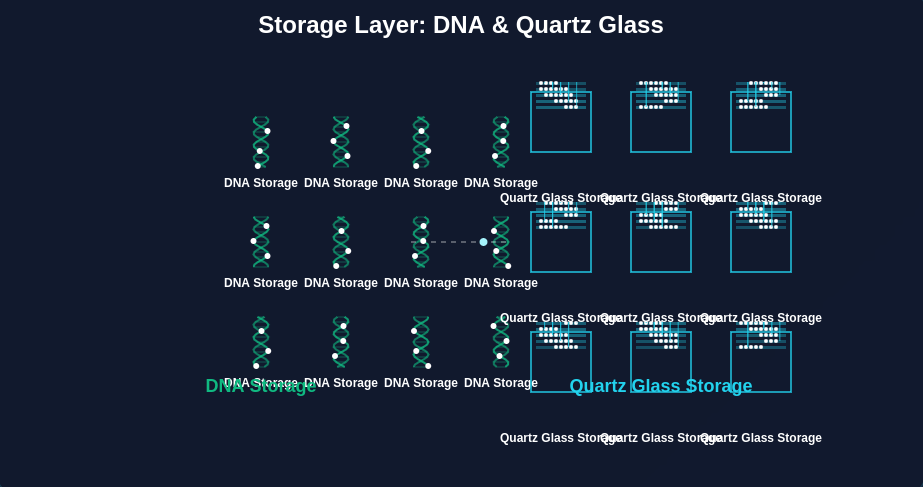

Processing Layer · 2026‑01‑08

Bio-Interface Layer · 2026‑01‑08

Technical Panel · 2026‑01‑08

Interactive Simulation Features

Interactive Simulation Canvas

- ✓ Real-time visualization of organic-photonic hybrid system

- ✓ Dynamic component animations and data flows

- ✓ Interactive zoom and pan capabilities

- ✓ Multiple visualization detail levels

Multiple View Modes

- ✓ Full System View - All components integrated

- ✓ Storage Layer - DNA & quartz glass visualization

- ✓ Processing Layer - Optical neural network animation

- ✓ Bio-Interface Layer - Hydrogel implant simulation

Live Data Display & Metrics

Interactive Controls

Technical Information Panel

Component Details

- ✓ Detailed descriptions of each system component

- ✓ Technical specifications and capabilities

- ✓ Integration architecture and protocols

- ✓ Performance benchmarks and metrics

Development Timeline

- ✓ Convergence roadmap 2025-2050+

- ✓ Current research status indicators

- ✓ Milestone tracking and progress

- ✓ Future development projections

Visual Effects & Animations

Real-Time Data Flow Visualization

System Architecture

Storage Subsystem

- ✓ DNA Storage - Archival, ultra-dense (1 EB/gram)

- ✓ Quartz Glass - Fast access, radiation-resistant

- ✓ Hybrid Controller - Intelligent data allocation

- ✓ Error Correction - Quantum-resistant encoding
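The simplest way to picture DNA storage is to map every 2 bits onto one of the four nucleotides. This sketch uses that naive mapping (an assumption, not the simulator's documented scheme); practical encodings also avoid long runs of the same base, balance GC content, and add error-correcting codes, none of which is shown here.

```python
# Naive 2-bits-per-nucleotide mapping (ignores biochemical constraints).
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}

def encode(data: bytes) -> str:
    """Convert bytes to a nucleotide string, 4 bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))

def decode(strand: str) -> bytes:
    """Convert a nucleotide string back to bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))

strand = encode(b"Hi")
print(strand)  # CAGACGGC
```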

Processing Subsystem

- ✓ Optical Neural Network - Light-speed computation

- ✓ Photonic Circuits - Low energy, high bandwidth

- ✓ Quantum Co-processor - Specialized calculations

- ✓ Adaptive Learning - Real-time optimization
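Published optical neural networks often build their weight matrices from meshes of Mach-Zehnder interferometers (MZIs). The page does not say whether this system uses them, so the sketch below simply assumes it does. It shows how a single phase setting θ routes optical power between two outputs, which is the tunable 2×2 building block such meshes compose. The beam-splitter convention used here is one common choice, not necessarily the system's.

```python
import cmath
import math

# 50:50 beam splitter (one common convention); the phase shifter sits on the upper arm.
SQRT_HALF = 1 / math.sqrt(2)
BEAM_SPLITTER = [[SQRT_HALF, 1j * SQRT_HALF],
                 [1j * SQRT_HALF, SQRT_HALF]]


def matmul(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mzi(theta: float):
    """Transfer matrix of a Mach-Zehnder interferometer: splitter, phase, splitter."""
    phase = [[cmath.exp(1j * theta), 0], [0, 1]]
    return matmul(BEAM_SPLITTER, matmul(phase, BEAM_SPLITTER))


def apply(matrix, field):
    """Propagate a 2-mode optical field (complex amplitudes) through the device."""
    return [sum(matrix[i][k] * field[k] for k in range(2)) for i in range(2)]


# Light entering the top port: the fraction leaving the top port is sin^2(theta/2).
for theta in (0.0, math.pi / 2, math.pi):
    out = apply(mzi(theta), [1, 0])
    print(f"theta={theta:.2f}  top={abs(out[0])**2:.3f}  bottom={abs(out[1])**2:.3f}")
```

The transfer matrix is unitary, so power is redistributed between the outputs but never lost. The nonlinear activation of a full network would still have to be supplied separately.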

Bio-Interface Subsystem

- ✓ Hydrogel Implant - Biocompatible neural interface

- ✓ Signal Processing - Real-time neural decoding

- ✓ Feedback Systems - Two-way communication

- ✓ Safety Protocols - Fail-safe mechanisms

Convergence Timeline (2025-2050+)

Responsive Design Features

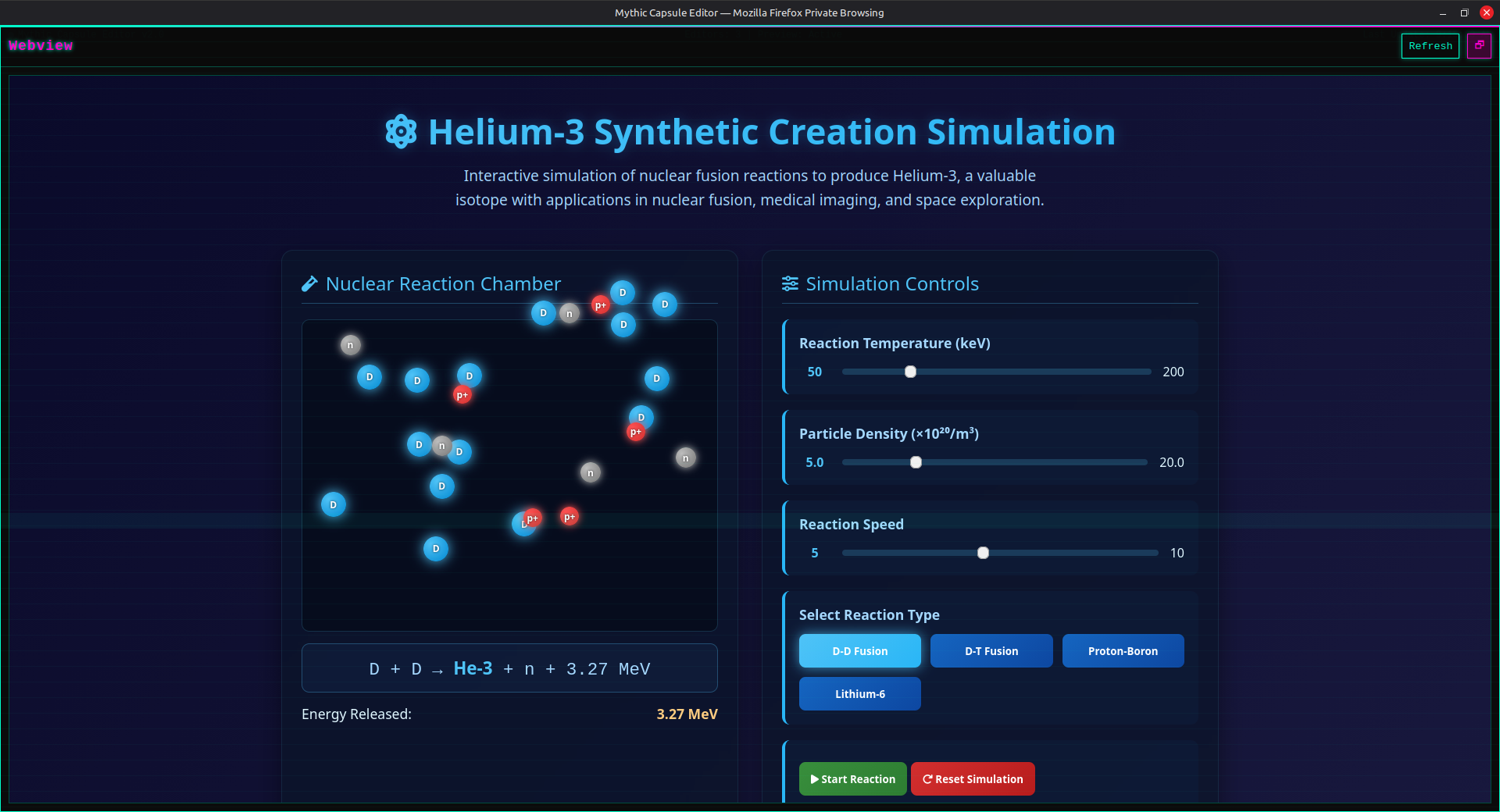

Helium‑3 Synthetic Creation Simulation

The Helium‑3 Synthetic Creation Simulation provides an interactive visualization of nuclear fusion reactions to produce Helium‑3, a valuable isotope with applications in nuclear fusion, medical imaging, and space exploration. This simulation allows real‑time manipulation of reaction parameters and visualizes the nuclear physics behind isotope synthesis.

SIMULATION ACTIVE · Real-time nuclear fusion visualization with interactive reaction controls

Helium‑3 Simulation · 2026‑01‑09

Nuclear Reaction Chamber

Particle Visualization

- ✓ Deuterium nuclei (D) with proton-neutron pairs

- ✓ Real‑time particle interactions and collisions

- ✓ Fusion reaction animation and visualization

- ✓ Helium‑3 formation and neutron emission

Reaction Dynamics

- ✓ D + D → He‑3 + n + 3.27 MeV reaction (one of two roughly equally likely D‑D branches; the other produces T + p)

- ✓ Energy release calculation and display

- ✓ Particle trajectory and velocity visualization

- ✓ Reaction success/failure rate tracking
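The 3.27 MeV figure follows from the reaction's mass defect. The sketch below recomputes it from tabulated atomic masses, rounded from standard mass tables. It then splits the energy between the two products, assuming the reactants start at rest and using non-relativistic momentum conservation. It is a check on the quoted number, not the simulation's own code.

```python
# Atomic masses in unified atomic mass units (rounded from standard tables).
MASS_U = {
    "D": 2.014101778,
    "He3": 3.016029322,
    "n": 1.008664916,
}
U_TO_MEV = 931.49410242  # energy equivalent of 1 u in MeV (CODATA 2018)


def q_value(reactants, products):
    """Energy released (MeV) = (mass before - mass after) * c^2."""
    delta = sum(MASS_U[p] for p in reactants) - sum(MASS_U[p] for p in products)
    return delta * U_TO_MEV


def split_energy(q, m_a, m_b):
    """Share Q between two products by momentum conservation (reactants at rest).

    Product a carries q * m_b / (m_a + m_b), so the lighter product gets more.
    """
    e_a = q * m_b / (m_a + m_b)
    return e_a, q - e_a


q = q_value(["D", "D"], ["He3", "n"])
e_he3, e_n = split_energy(q, MASS_U["He3"], MASS_U["n"])
print(f"Q = {q:.2f} MeV, He-3 = {e_he3:.2f} MeV, n = {e_n:.2f} MeV")
# Q = 3.27 MeV, He-3 = 0.82 MeV, n = 2.45 MeV
```

Using atomic rather than nuclear masses is safe here because the electron counts balance on both sides (two deuterium atoms versus one He-3 atom plus a bare neutron).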

Simulation Controls

About Helium‑3 Production

What is Helium‑3?

Helium‑3 (³He) is a light, non‑radioactive isotope of helium with two protons and one neutron. It is extremely rare on Earth but present at higher concentrations in the lunar regolith, where the solar wind has implanted it over billions of years.

Synthetic Production

Helium‑3 can be produced artificially in two main ways: through the radioactive decay of tritium (T), which is the main source of today's supply, or through nuclear fusion reactions such as deuterium‑deuterium (D‑D) fusion. The D‑D reaction pathway is shown in this simulation.
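On the tritium route, He-3 accumulates as tritium beta-decays (T → ³He + e⁻ + antineutrino). The minimal sketch below assumes simple exponential decay with a half-life of about 12.3 years. It ignores any losses or replenishment of the tritium stock.

```python
TRITIUM_HALF_LIFE_YEARS = 12.32  # approximate half-life of tritium


def he3_fraction(years: float) -> float:
    """Fraction of an initial tritium inventory that has decayed to He-3."""
    return 1.0 - 2.0 ** (-years / TRITIUM_HALF_LIFE_YEARS)


for t in (1, 5, 12.32, 25):
    print(f"{t:>6} years: {he3_fraction(t):.1%} of the tritium has become He-3")
```

Roughly 5% of a tritium stock converts per year, which is why this route yields He-3 only slowly.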

Applications of Helium‑3

Simulation Details

Interactive Features

Parameter Controls

- ✓ Real‑time temperature adjustment (keV)

- ✓ Particle density control (×10²⁰/m³)

- ✓ Reaction speed modulation

- ✓ Reaction type selection (D‑D, D‑T)
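The temperature slider is in keV, following the usual plasma-physics convention of quoting k_B·T as an energy. The density slider is in units of 10²⁰ particles per m³. Assuming those slider semantics, conversion to SI units looks like this:

```python
BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K (CODATA 2018)


def kev_to_kelvin(temperature_kev: float) -> float:
    """Convert a plasma temperature quoted as k_B*T in keV to kelvin."""
    return temperature_kev * 1e3 / BOLTZMANN_EV_PER_K


def density_per_m3(slider_value: float) -> float:
    """Convert a density slider reading in units of 1e20 m^-3 to m^-3."""
    return slider_value * 1e20


for t_kev in (10, 50, 100):
    print(f"{t_kev:>4} keV = {kev_to_kelvin(t_kev):.3e} K")
```

Each keV corresponds to about 11.6 million kelvin, so fusion-relevant temperatures of tens of keV are hundreds of millions of kelvin.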

Visual Feedback

- ✓ Particle interaction animations

- ✓ Energy release visualization

- ✓ Reaction success rate indicator

- ✓ Real‑time metrics and statistics

Technical Parameters

Nuclear Reaction Types

Educational Value

Nuclear Physics

- ✓ Understand nuclear fusion fundamentals

- ✓ Learn about Coulomb barrier and temperature requirements

- ✓ Study reaction cross‑sections and probabilities

- ✓ Explore isotope production methods

Energy Applications

- ✓ Compare fusion reaction energy yields

- ✓ Understand aneutronic fusion advantages

- ✓ Study potential fusion reactor fuels

- ✓ Analyze energy production scalability

Project Jellyfish

Project Jellyfish explores the theoretical integration of Turritopsis dohrnii (immortal jellyfish) DNA into human cellular systems to unlock regenerative capabilities and longevity enhancement. This simulation models DNA compatibility, transdifferentiation processes, and potential risks associated with cross-species genetic integration.

ETHICAL SIMULATION ACTIVE · Theoretical exploration only · Significant biological challenges exist

Project Jellyfish · 2026‑01‑10

Simulation Overview

Research Focus

- ✓ Turritopsis dohrnii DNA sequence analysis

- ✓ Cellular transdifferentiation mechanisms

- ✓ Human-jellyfish genetic compatibility modeling

- ✓ Regenerative medicine potential assessment
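The page does not say how "genetic compatibility" is scored. A conventional first step in comparing two DNA sequences is a global alignment score. The sketch below implements the textbook Needleman-Wunsch recurrence with assumed scoring values (match +1, mismatch -1, gap -1). It illustrates sequence comparison only and is not the simulation's compatibility model.

```python
def global_alignment_score(a: str, b: str, match: int = 1,
                           mismatch: int = -1, gap: int = -1) -> int:
    """Needleman-Wunsch global alignment score with a linear gap penalty."""
    rows, cols = len(a) + 1, len(b) + 1
    # score[i][j] holds the best score for aligning a[:i] with b[:j]
    score = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap
    for i in range(1, rows):
        for j in range(1, cols):
            diag = score[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            score[i][j] = max(diag, score[i - 1][j] + gap, score[i][j - 1] + gap)
    return score[-1][-1]


print(global_alignment_score("ACGTTGCA", "ACGTAGCA"))  # 6
```

The dynamic-programming table costs O(len(a) × len(b)) time and memory. Genome-scale comparison therefore relies on heuristic aligners rather than this direct form.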

Simulation Status

Simulation Metrics Dashboard

Simulation Controls

Research Parameters

Simulation Log

Scientific Basis

Turritopsis Dohrnii

- ✓ Known as "immortal jellyfish"

- ✓ Capable of cellular transdifferentiation

- ✓ Can revert to younger life stages

- ✓ Unique genetic pathways for regeneration

Research Challenges

- ✓ 600+ million years of evolutionary divergence

- ✓ Fundamental differences in cellular biology

- ✓ Immune system compatibility issues

- ✓ Cancer risk from uncontrolled cell growth

Potential Applications

Ethical Framework

Primary Concerns

- ✓ Unintended biological consequences

- ✓ Cancer development risk (85%+)

- ✓ Immune system rejection (95%+)

- ✓ Genetic stability issues (15%)

Regulatory Considerations

- ✓ Cross-species genetic modification

- ✓ Human germline editing implications

- ✓ Bioethics committee approval required

- ✓ International regulatory compliance

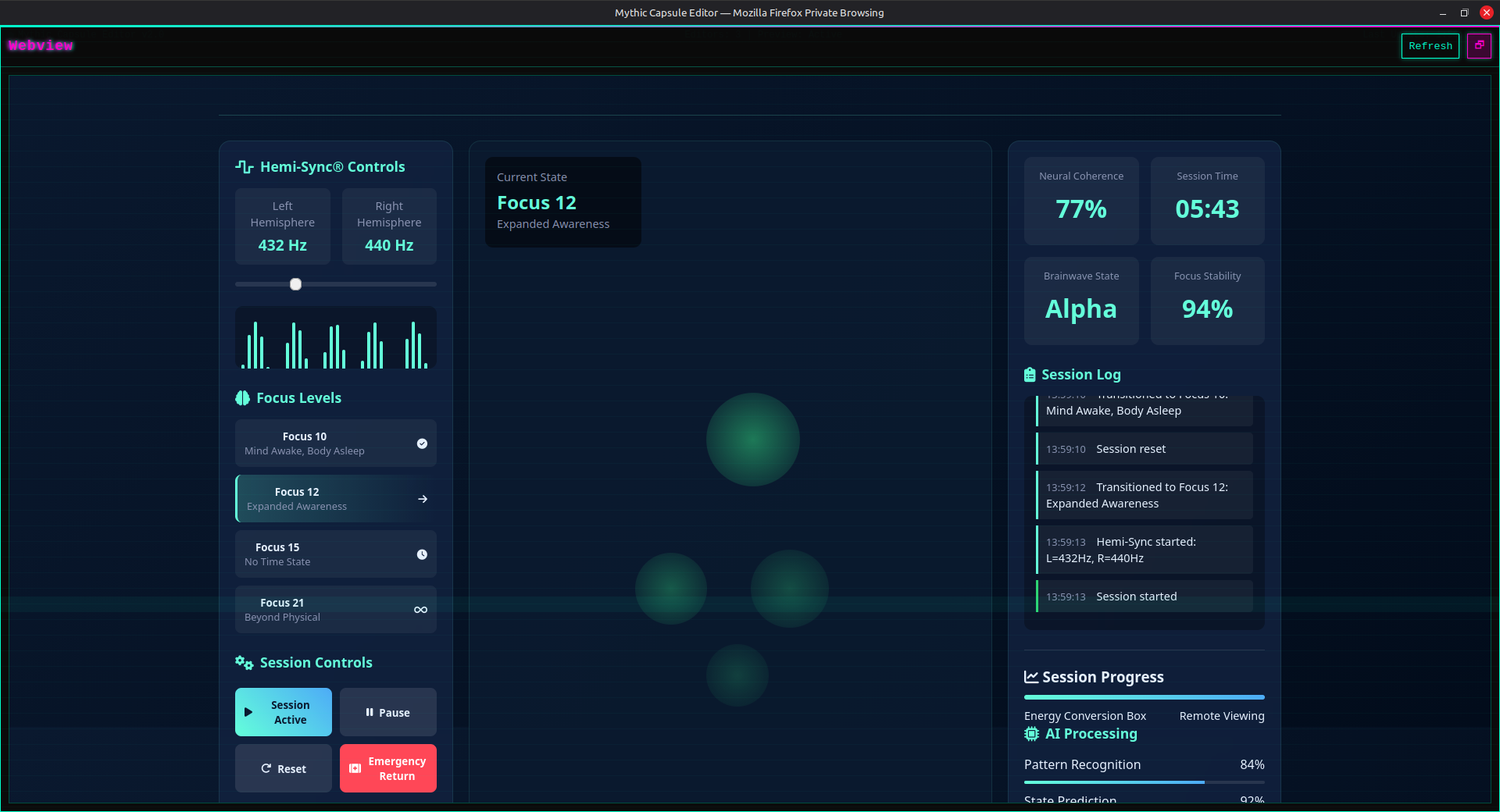

Hemi‑Sync Consciousness Simulation

The Hemi‑Sync Consciousness Simulation explores the use of binaural beat technology for brainwave entrainment and consciousness exploration. Based on the Monroe Institute's research, this simulation visualizes how specific audio frequencies are thought to synchronize the brain hemispheres and facilitate altered states of awareness for meditation, learning, and expanded consciousness.

SIMULATION ACTIVE · Real-time brainwave synchronization visualization with interactive frequency controls

Hemi‑Sync Simulation · 2026‑01‑10

Simulation Interface Overview

Brainwave Visualization

- ✓ Real-time EEG pattern display with bilateral symmetry

- ✓ Left/right hemisphere activity comparison

- ✓ Brainwave frequency spectrum analysis (Beta, Alpha, Theta, Delta)

- ✓ Coherence and synchronization indicators
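The four bands named above are conventionally separated at roughly 4, 8, and 13 Hz, with beta extending to about 30 Hz. The exact boundaries vary slightly between sources. Assuming those edges, which conveniently span the simulator's 0.5-30 Hz beat range, a band classifier can be as simple as:

```python
# Conventional EEG band edges in Hz; exact boundaries vary between sources.
BANDS = [
    ("Delta", 0.5, 4.0),
    ("Theta", 4.0, 8.0),
    ("Alpha", 8.0, 13.0),
    ("Beta", 13.0, 30.0),
]


def classify_band(frequency_hz: float) -> str:
    """Return the band whose half-open range [low, high) contains the frequency."""
    for name, low, high in BANDS:
        if low <= frequency_hz < high:
            return name
    return "Out of range"


print(classify_band(6.0))  # Theta
```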

Audio Control Panel

- ✓ Carrier frequency adjustment (100-400 Hz)

- ✓ Beat frequency modulation (0.5-30 Hz)

- ✓ Volume and balance controls

- ✓ Audio waveform visualization

Consciousness State Tracking

Hemi‑Sync Technology Overview

What is Hemi‑Sync?

Hemi‑Sync® (Hemispheric Synchronization) is a patented audio technology developed by The Monroe Institute. It uses binaural beats with the aim of synchronizing brainwave activity between the left and right cerebral hemispheres and facilitating altered states of consciousness for meditation, learning, and personal growth.

How Binaural Beats Work

When slightly different frequencies are presented to each ear (e.g., 200 Hz in the left ear and 208 Hz in the right ear), the brain perceives a beat at the difference frequency (8 Hz): the binaural beat. This is thought to drive brainwave entrainment, in which neural activity synchronizes to the beat frequency.
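Using the 200 Hz / 208 Hz example above, the minimal sketch below synthesizes a stereo binaural stimulus: one pure tone per channel, offset by the beat frequency. The sample rate and function names are assumptions. The output is raw float samples in [-1, 1], not an audio file.

```python
import math


def binaural_tone(carrier_hz: float, beat_hz: float, seconds: float,
                  sample_rate: int = 44_100):
    """Return (left, right) sample lists: carrier in the left ear, carrier + beat in the right."""
    n = int(seconds * sample_rate)
    left = [math.sin(2 * math.pi * carrier_hz * i / sample_rate) for i in range(n)]
    right = [math.sin(2 * math.pi * (carrier_hz + beat_hz) * i / sample_rate)
             for i in range(n)]
    return left, right


def perceived_beat(left_hz: float, right_hz: float) -> float:
    """The binaural beat is the difference between the two ear frequencies."""
    return abs(right_hz - left_hz)


left, right = binaural_tone(carrier_hz=200, beat_hz=8, seconds=1.0)
print(len(left), perceived_beat(200, 208))  # 44100 8
```

Each channel contains only a single tone. The beat exists only in perception, which is why binaural stimuli are delivered over headphones.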

Interactive Simulation Controls

Real-Time Brainwave Metrics

Applications & Benefits

Scientific Research Basis

Simulation Features

Visual Feedback

- ✓ Real-time brainwave pattern visualization

- ✓ Left/right hemisphere activity comparison

- ✓ Frequency spectrum waterfall display

- ✓ Coherence and synchronization indicators

Audio Simulation

- ✓ Synthesized binaural beat generation

- ✓ Pink noise background for realism

- ✓ Adjustable carrier and beat frequencies

- ✓ Stereo panning and balance controls

Session Management

- ✓ Pre-programmed frequency protocols

- ✓ Customizable session durations

- ✓ Progress tracking and session history

- ✓ Exportable session data

Educational Content

- ✓ Brainwave science explanations

- ✓ Hemi‑Sync history and research

- ✓ Consciousness state descriptions

- ✓ Safety guidelines and best practices

Safety & Guidelines

Starship Life Support Simulation

The Starship Life Support Simulation models a comprehensive three-layer architecture for sustaining human life during interstellar journeys spanning decades or centuries. This system integrates targeted temperature management, fluid-based rehabilitation, and biological support systems to address both physiological and psychological challenges of deep space travel.

SIMULATION ACTIVE · Three-layer human sustainability architecture · Real-time system monitoring

Starship Life Support · 2026‑01‑12

Simulation Educational Context

- No "Melanon": No known substance allows consciousness at cryogenic temperatures.

- Temperature Reality: 70°C is fatal. This simulation uses safe metabolic suppression ranges (32-37°C).

- Saline vs. Cryo: Saline tanks are for rehabilitation (body temperature), not freezing (-100°C).

Layer 1: Primary Hibernation System

Technology & Mechanism

- ✓ Targeted Temperature Management (TTM) with Pharmacological Torpor

- ✓ Induces mild hypothermia (32-34°C) combined with metabolic suppression drugs

- ✓ Targets ~90% metabolic reduction during the cruise phase

- ✓ Reduces psychological strain and resource consumption
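A common first approximation for how temperature alone affects metabolic rate is the Q10 temperature coefficient. This sketch assumes a Q10 of 2.5; typical values for mammalian tissue are roughly 2-3. On that assumption, cooling to 32-34 °C cuts metabolism by only about 24-37%, so most of the ~90% figure would have to come from the metabolic suppression drugs.

```python
def metabolic_rate(temp_c: float, baseline_rate: float = 1.0,
                   baseline_temp_c: float = 37.0, q10: float = 2.5) -> float:
    """Q10 model: the rate changes by a factor of q10 for every 10 °C of cooling or warming."""
    return baseline_rate * q10 ** ((temp_c - baseline_temp_c) / 10.0)


for t in (37, 34, 32):
    reduction = 1 - metabolic_rate(t)
    print(f"{t} °C: {reduction:.0%} reduction from temperature alone")
```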

Simulation Controls

Layer 2: Fluid-Based Rehabilitation (FTUs)

Technology & Environment

- ✓ Advanced Floatation Therapy Units (FTUs)

- ✓ Epsom salt-saturated water (density ~1.25 g/cm³) at 34-35°C

- ✓ Low-gravity fluid environment for muscle preservation

- ✓ Sensory reduction and mental health maintenance

Primary Functions

- ✓ Pre-Hibernation: 48-hour sensory reduction preparation

- ✓ Post-Hibernation: Graduated muscle reactivation protocols

- ✓ Mental Health: Quarterly "reset" sessions for awake crew

- ✓ Prevention of sensory deprivation psychosis

Layer 3: Biological Support (CECs)

Technology & Purpose

- ✓ Controlled Environment Chambers (CECs)

- ✓ Hydroponic plant cultures for oxygen replenishment

- ✓ Tissue samples and experimental organisms

- ✓ Food sustainability and biological diversity maintenance

System Integration

Why This Architecture Works

1. Risk Distribution

2. Human-Centric Design

3. Progressive Adaptation

System Status Dashboard

Mission Phase Controls

Emergency Protocols

System Failures

- ✓ Hibernation failure → FTU conversion to life support

- ✓ Bio system failure → Stored reserves activation

- ✓ Power failure → Minimal life support prioritization

- ✓ Environmental breach → Emergency pod deployment

Medical Emergencies

- ✓ Crew medical crisis → Medical FTU protocols

- ✓ Psychological crisis → Sensory integration therapy

- ✓ Metabolic imbalance → Nutritional adjustment

- ✓ Infection outbreak → Isolation and treatment

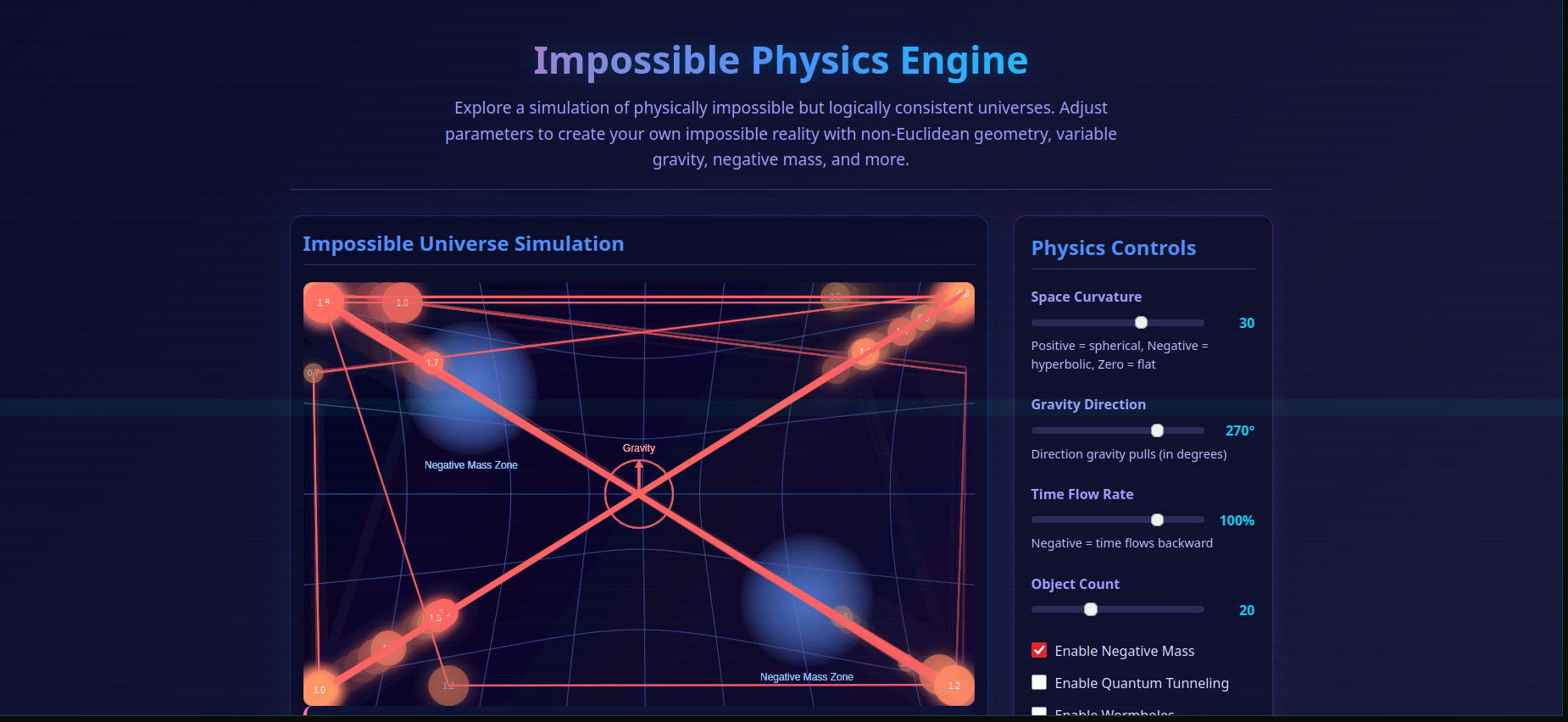

Impossible Physics Engine

The Impossible Physics Engine is an interactive simulation environment that allows you to experiment with physics concepts that violate our universe's laws while maintaining internal logical consistency. This engine enables real-time manipulation of space-time geometry, gravity, mass, and quantum phenomena.

SIMULATION ACTIVE · Real-time physics manipulation with interactive parameter controls

Impossible Physics Engine · 2026‑01‑13

Core Physics Manipulation Features

Non-Euclidean Space Geometry

- ✓ Adjust space curvature from hyperbolic to spherical

- ✓ Interactive grid visualization of space deformation

- ✓ Real-time object trajectory modification

- ✓ Multiple geometry presets for experimentation
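The hyperbolic-to-spherical slider can be made concrete with one measurable quantity. On a 2D surface of constant Gaussian curvature K, a circle of geodesic radius r has circumference:

- 2π·sin(√K·r)/√K for K > 0
- 2πr for K = 0
- 2π·sinh(√(−K)·r)/√(−K) for K < 0

The sketch below evaluates this, assuming the slider maps to a single constant K. The engine's internal model is not described.

```python
import math


def circle_circumference(radius: float, curvature: float) -> float:
    """Circumference of a geodesic circle on a 2D surface of constant curvature K.

    K > 0: spherical, K = 0: flat, K < 0: hyperbolic.
    """
    if curvature > 0:
        k = math.sqrt(curvature)
        return 2 * math.pi * math.sin(k * radius) / k
    if curvature < 0:
        k = math.sqrt(-curvature)
        return 2 * math.pi * math.sinh(k * radius) / k
    return 2 * math.pi * radius


for K in (-1.0, 0.0, 1.0):
    print(f"K={K:+.0f}: C(r=1) = {circle_circumference(1.0, K):.3f}")
```

Hyperbolic circles have more circumference than 2πr and spherical ones less. That difference is what a grid deformation visual would need to convey.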

Variable Gravity Control

- ✓ Change gravity direction and strength dynamically

- ✓ Multiple gravity sources with individual parameters

- ✓ Negative gravity regions for anti-gravity effects

- ✓ Gradient gravity fields for complex simulations

Negative Mass Regions

- ✓ Blue zones where objects have negative mass

- ✓ Objects repel each other in negative mass regions

- ✓ Configurable mass sign and magnitude

- ✓ Visual indicators for mass polarity
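Newtonian gravity with negative mass can be analyzed directly, assuming (as in Bondi's classic analysis) that inertial and gravitational mass share the same sign. Under that assumption, two negative masses push each other apart, consistent with the bullet above, while a positive/negative pair accelerates in the same direction. A minimal one-dimensional sketch:

```python
def pair_accelerations(m1: float, x1: float, m2: float, x2: float,
                       G: float = 1.0):
    """Newtonian accelerations of two point masses on a line.

    Assumes inertial mass equals gravitational mass, sign included, so each
    body's acceleration depends only on the *other* body's mass.
    """
    r = x2 - x1
    dist = abs(r)
    a1 = G * m2 * r / dist**3    # toward body 2 if m2 > 0, away if m2 < 0
    a2 = G * m1 * -r / dist**3   # toward body 1 if m1 > 0, away if m1 < 0
    return a1, a2


print(pair_accelerations(-1, 0, -1, 1))  # (-1.0, 1.0): negative masses repel
print(pair_accelerations(+1, 0, -1, 1))  # (-1.0, -1.0): "runaway" pair
```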

Time Flow Control

- ✓ Speed up, slow down, or reverse time

- ✓ Localized time dilation zones

- ✓ Temporal paradox prevention algorithms

- ✓ Time flow visualization with particle trails
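Reversing time is easiest to support with a time-reversible integrator. The page does not say how the engine steps time. This sketch assumes objects advance with a fixed step dt scaled by the time-flow control. The leapfrog scheme below then retraces its trajectory when dt is negated, up to floating-point round-off. A localized time-dilation zone could, under the same assumption, be modeled by scaling dt per object.

```python
def leapfrog(x: float, v: float, accel, dt: float, steps: int):
    """Kick-drift-kick leapfrog; a negative dt runs the same dynamics backwards."""
    for _ in range(steps):
        v += 0.5 * dt * accel(x)
        x += dt * v
        v += 0.5 * dt * accel(x)
    return x, v


def spring(x: float) -> float:
    """Harmonic oscillator acceleration, a = -x."""
    return -x


x0, v0 = 1.0, 0.0
x1, v1 = leapfrog(x0, v0, spring, dt=0.01, steps=1000)   # forward
x2, v2 = leapfrog(x1, v1, spring, dt=-0.01, steps=1000)  # time reversed
print(abs(x2 - x0) < 1e-9, abs(v2 - v0) < 1e-9)  # True True
```

Non-reversible schemes such as explicit Euler would drift away from the starting state when run backwards.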

Quantum & Relativistic Effects

Interactive Control Panel

Visualization Features

Object Visualization

- ✓ Color-coded objects based on mass and properties

- ✓ Particle trails showing historical paths

- ✓ Velocity vectors and acceleration indicators

- ✓ Real-time property displays for selected objects

Space Visualization

- ✓ Grid deformation showing space curvature

- ✓ Gravity field lines and potential contours

- ✓ Wormhole visualization with connection paths

- ✓ Quantum probability density clouds

Simulation Capabilities

Educational Applications

Physics Education

- ✓ Understand fundamental physics concepts

- ✓ Visualize abstract mathematical relationships

- ✓ Experiment with "what-if" physics scenarios

- ✓ Develop intuition for complex physical systems

Research & Development

- ✓ Test theoretical physics models

- ✓ Simulate alternative universe physics

- ✓ Develop novel physics visualization techniques

- ✓ Explore computational physics algorithms

Technical Implementation

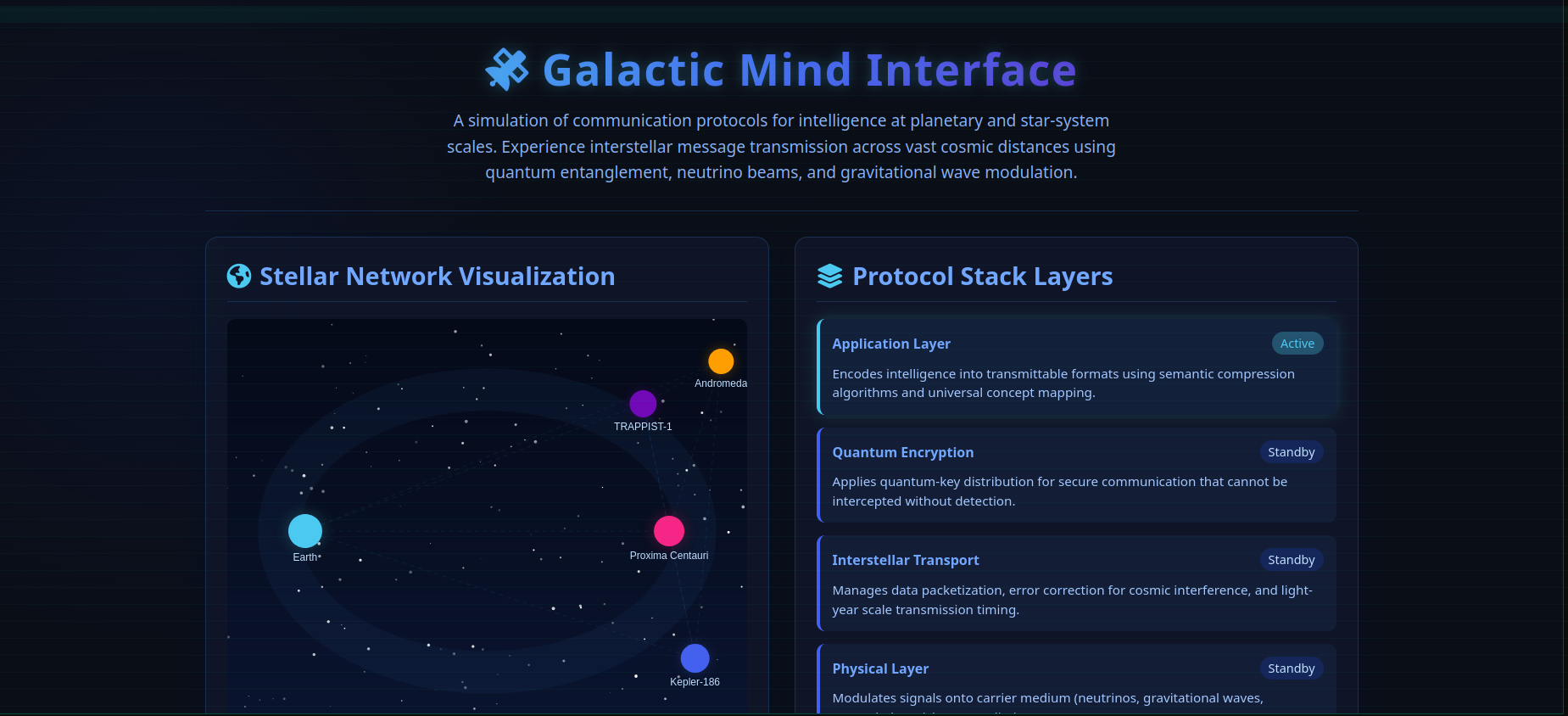

Galactic Mind Interface

The Galactic Mind Interface simulates theoretical interstellar communication systems using multiple physics-based protocols. This interactive visualization demonstrates how civilizations could communicate across cosmic distances using quantum entanglement, neutrino beams, gravitational waves, and electromagnetic signals at planetary and star-system scales.

SIMULATION ACTIVE · Real-time interstellar communication visualization with multiple protocol options

Galactic Mind Interface · 2026‑01‑13

Interactive Communication Protocols

Quantum Entanglement

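- ✓ Often imagined as instantaneous communication, although the no-communication theorem rules out faster-than-light signaling via entanglement alone

- ✓ Requires entangled particle pairs at both ends

- ✓ Modeled as information transfer through quantum state manipulation

- ✓ No speed-of-light delay in the simulation (real entanglement-based protocols such as quantum teleportation still need a light-speed classical channel)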

Neutrino Beam

- ✓ Travels at very nearly light speed (neutrinos have a tiny but nonzero mass)

- ✓ Penetrates through matter with minimal interaction

- ✓ Requires massive detectors for reception

- ✓ Low data rate due to detection challenges

Gravitational Wave

- ✓ Travels at light speed (c)

- ✓ Requires massive energy for generation

- ✓ Passes through matter without scattering

- ✓ Extremely weak signal strength

Electromagnetic Signals

- ✓ Light speed transmission (c)

- ✓ Focused laser for directed communication

- ✓ Radio waves for omnidirectional broadcast

- ✓ Subject to interference and attenuation

Celestial Destination Network

Protocol Stack Visualization

Communication Log Features

Real-time Transmission Log

- ✓ Timestamped transmission activities

- ✓ Protocol selection and activation

- ✓ Destination distance calculations

- ✓ Signal strength and quality metrics
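The distance and time-delay calculations reduce to dividing distance by signal speed, and every light-speed protocol listed above has the same delay. The minimal sketch below also includes an inverse-square falloff as one plausible basis for a "signal strength" metric. That choice is an assumption; the page does not define the metric.

```python
SPEED_OF_LIGHT_M_S = 299_792_458          # exact, by definition of the metre
ASTRONOMICAL_UNIT_M = 149_597_870_700     # exact, IAU 2012 definition
LIGHT_YEAR_M = 9_460_730_472_580_800      # exact, light-year based on the Julian year
SECONDS_PER_JULIAN_YEAR = 365.25 * 86_400


def one_way_delay_s(distance_m: float, speed_fraction_of_c: float = 1.0) -> float:
    """Travel time for a signal moving at a given fraction of c."""
    return distance_m / (speed_fraction_of_c * SPEED_OF_LIGHT_M_S)


def relative_flux(distance_m: float, reference_m: float) -> float:
    """Inverse-square falloff of an isotropic broadcast, relative to a reference distance."""
    return (reference_m / distance_m) ** 2


print(f"Earth-Sun: {one_way_delay_s(ASTRONOMICAL_UNIT_M):.1f} s")  # 499.0 s
proxima_m = 4.25 * LIGHT_YEAR_M  # approximate distance to Proxima Centauri
print(f"Proxima: {one_way_delay_s(proxima_m) / SECONDS_PER_JULIAN_YEAR:.2f} years")
print(f"Flux at Proxima vs 1 AU: {relative_flux(proxima_m, ASTRONOMICAL_UNIT_M):.1e}")
```

A focused laser link would fall off more slowly than this isotropic estimate, because its beam spreads only by its divergence angle.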

System Status Monitoring

- ✓ Connection status indicators

- ✓ Protocol stack activation states

- ✓ Signal visualization animations

- ✓ Error detection and correction logs

Theoretical Physics Applications

Simulation Parameters

Educational Applications

Physics Education

- ✓ Visualize different physics-based communication methods

- ✓ Understand light-speed limitations and theoretical alternatives

- ✓ Explore quantum mechanics applications

- ✓ Learn about relativistic effects on communication

SETI Research

- ✓ Understand challenges in interstellar communication

- ✓ Explore potential alien communication methods

- ✓ Learn about signal detection and interpretation

- ✓ Study cosmic distance and time delay effects

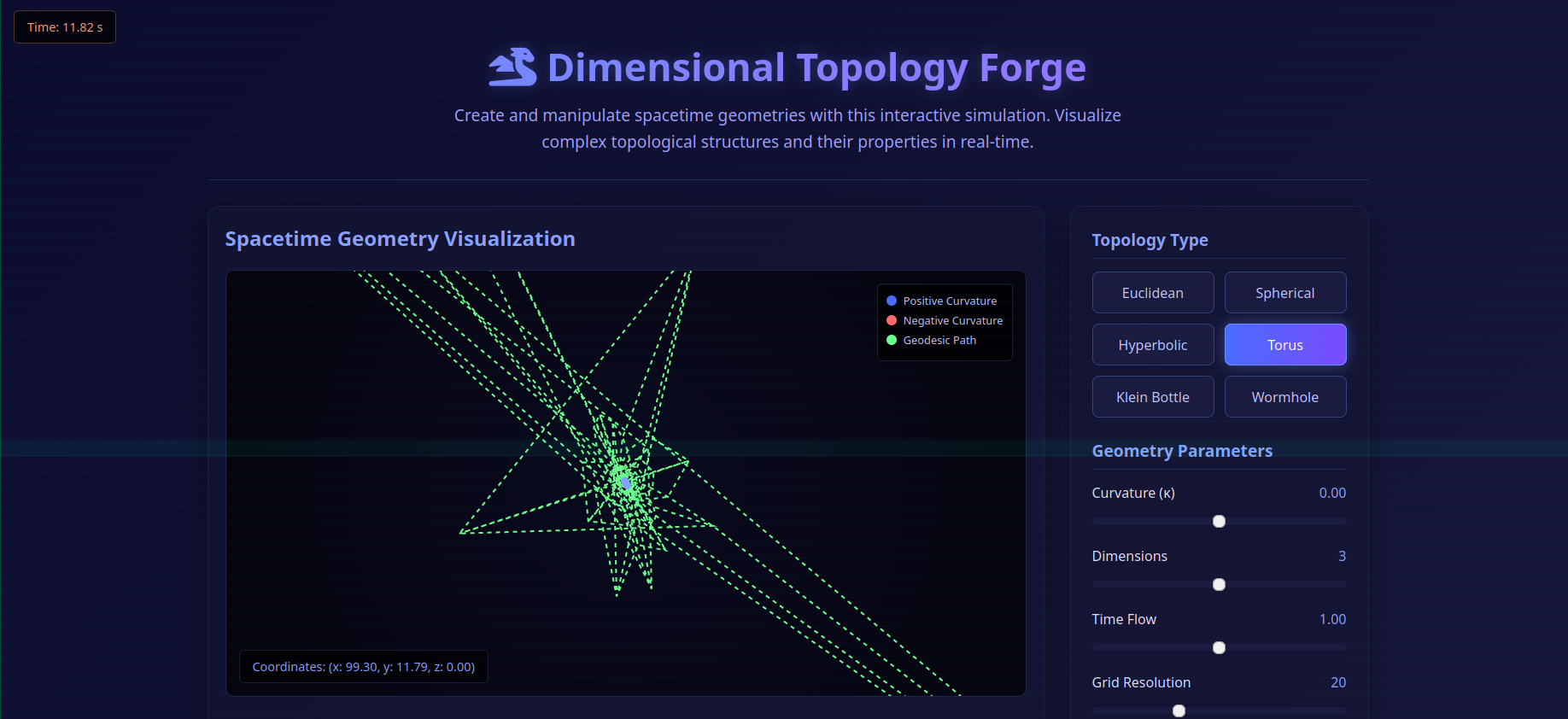

Dimensional Topology Forge

The Dimensional Topology Forge is an interactive visualization engine that allows real-time exploration of different spacetime geometries and dimensional topologies. This simulation enables manipulation of space curvature, dimension count, and geometric properties across multiple theoretical spacetime configurations.

SIMULATION ACTIVE · Real-time spacetime geometry manipulation · Interactive topology visualization

Dimensional Topology Forge · 2026‑01‑13

Features of the Dimensional Topology Forge

Interactive Visualization

- ✓ Real-time rendering of different spacetime geometries

- ✓ Dynamic coordinate transformation based on topology

- ✓ Color-coded curvature visualization

- ✓ Animated transitions between topology types

Multiple Topology Types

- ✓ Euclidean (flat space with zero curvature)

- ✓ Spherical (positive curvature, closed geometry)

- ✓ Hyperbolic (negative curvature, saddle geometry)

- ✓ Torus (donut shape with periodic boundaries)

- ✓ Klein Bottle (non-orientable surface)

- ✓ Wormhole (hyperspace bridge connection)
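The closed surfaces above differ in topological invariants that a renderer can check. The sketch below assumes surfaces are represented as triangle meshes; the page does not say how the forge stores geometry. It computes the Euler characteristic χ = V − E + F. By the Gauss-Bonnet theorem, χ fixes the total curvature of a closed surface at 2πχ. A sphere gives χ = 2, and a torus built with wrap-around indexing (the periodic boundaries mentioned above) gives χ = 0. The Klein bottle also has χ = 0, so telling it apart from the torus needs an orientability check, which this sketch omits.

```python
import math


def euler_characteristic(triangles):
    """chi = V - E + F for a surface given as a list of vertex-index triangles."""
    vertices = {v for tri in triangles for v in tri}
    edges = {frozenset((tri[i], tri[(i + 1) % 3])) for tri in triangles for i in range(3)}
    return len(vertices) - len(edges) + len(triangles)


def torus_triangles(n: int, m: int):
    """Triangulate an n x m grid whose edges wrap around (periodic boundaries)."""
    tris = []
    for i in range(n):
        for j in range(m):
            a = i * m + j
            b = ((i + 1) % n) * m + j
            c = i * m + (j + 1) % m
            d = ((i + 1) % n) * m + (j + 1) % m
            tris += [(a, b, d), (a, d, c)]
    return tris


tetrahedron = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]  # a triangulated sphere
for name, surface in (("sphere", tetrahedron), ("torus", torus_triangles(3, 3))):
    chi = euler_characteristic(surface)
    print(f"{name}: chi = {chi}, total curvature = 2*pi*chi = {2 * math.pi * chi:.3f}")
```

The grid should be at least 3 × 3 so that no two wrap-around edges coincide.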

Interactive Controls

Real-time Information Display

Coordinate Systems

- ✓ Real-time coordinate display (x, y, z, t)

- ✓ Geodesic path calculation visualization

- ✓ Metric tensor component display

- ✓ Parallel transport visualization

Visualization Features

- ✓ Color-coded curvature heat mapping

- ✓ Legend for visual element interpretation

- ✓ Time synchronization indicators

- ✓ Topology-specific visual characteristics

Mathematical Foundations

Additional Features

Educational Applications

Mathematics & Physics

- ✓ Visualize abstract topological concepts

- ✓ Understand curvature and dimensional properties

- ✓ Explore non-Euclidean geometries intuitively

- ✓ Study theoretical spacetime configurations

Computer Graphics

- ✓ Study coordinate transformation algorithms

- ✓ Learn 3D rendering techniques

- ✓ Explore real-time visualization methods

- ✓ Understand mathematical surface representation

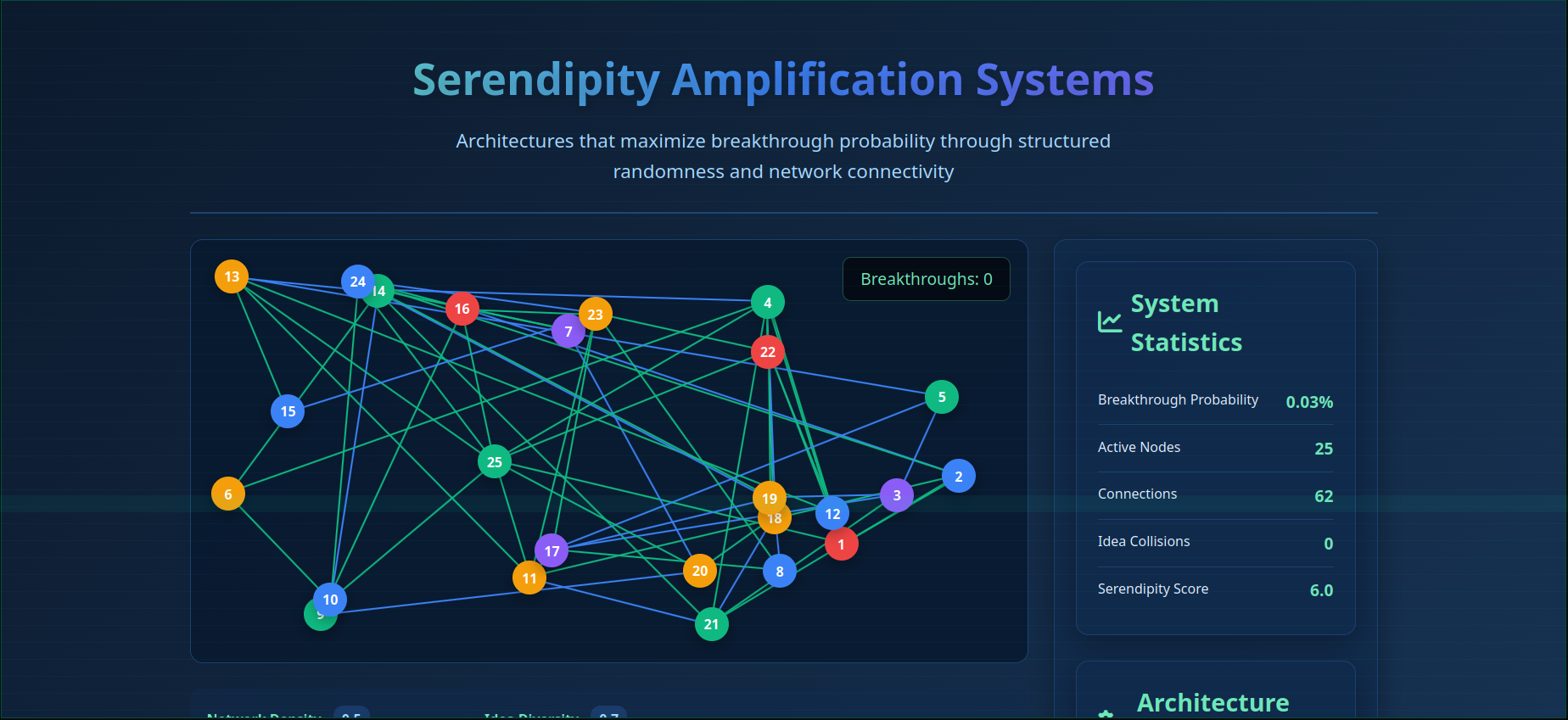

Serendipity Amplification Simulation

The Serendipity Amplification Simulation demonstrates how strategically designed networks can maximize breakthrough discovery probability through controlled connection architectures. This interactive tool visualizes how different parameter combinations influence serendipitous collisions between ideas across diverse knowledge domains.

SIMULATION ACTIVE · Real-time network optimization · Breakthrough probability modeling

Serendipity Amplification Simulation · 2026‑01‑14

Key Features of the Serendipity Amplification Simulation

Interactive Visualization

- ✓ Shows how different architectural parameters affect breakthrough probability

- ✓ Dynamic network visualization with real-time updates

- ✓ Color-coded knowledge domains and connection types

- ✓ Animated breakthrough events when valuable collisions occur

Adjustable Parameters

- ✓ Network Density: Controls how many connections exist between nodes

- ✓ Controlled Randomness: Introduces structured unpredictability

- ✓ Idea Diversity: Determines how different domains interact

- ✓ Collision Rate: Controls how often ideas intersect

Real-time Statistics & Tracking

Visual Elements

Network Representation

- ✓ Color-coded nodes representing different knowledge domains

- ✓ Blue connections for same-domain interactions

- ✓ Green connections for cross-domain interactions

- ✓ Node size indicates activity level and connection density
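The page does not specify the network model. This sketch assumes an Erdős-Rényi random graph, with Network Density as the probability that any two nodes are connected and Idea Diversity as the number of knowledge domains. It builds such a network and counts same-domain (blue) and cross-domain (green) connections. Turning cross-domain links into "breakthrough" events would require a further modeling rule, which is not shown here.

```python
import itertools
import random


def build_network(n_nodes: int, density: float, n_domains: int, seed: int = 0):
    """Random graph: each node pair is linked with probability `density`.

    Each node is assigned one of `n_domains` knowledge domains uniformly at random.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    domains = [rng.randrange(n_domains) for _ in range(n_nodes)]
    edges = [(u, v) for u, v in itertools.combinations(range(n_nodes), 2)
             if rng.random() < density]
    return domains, edges


def edge_mix(domains, edges):
    """Count same-domain (blue) and cross-domain (green) connections."""
    cross = sum(1 for u, v in edges if domains[u] != domains[v])
    return {"same_domain": len(edges) - cross, "cross_domain": cross}


domains, edges = build_network(n_nodes=50, density=0.1, n_domains=5)
print(len(edges), edge_mix(domains, edges))
```

With d equally likely domains, roughly a fraction 1 − 1/d of links cross domains. Raising Idea Diversity therefore shifts the picture toward green connections.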

Event Visualization

- ✓ Breakthrough events that appear when valuable collisions occur

- ✓ Animated particle effects for significant discoveries

- ✓ Historical breakthrough timeline display

- ✓ Connection strength visualization through line thickness

Architecture Types

Educational Applications

Simulation Controls

Interactive Features

- ✓ Real-time parameter adjustment sliders

- ✓ Preset architecture configurations

- ✓ Network speed and animation controls

- ✓ Export and save simulation states

Data Analysis

- ✓ Historical breakthrough trend graphs

- ✓ Efficiency metrics and optimization scores

- ✓ Connection pattern visualization

- ✓ Comparative analysis between configurations

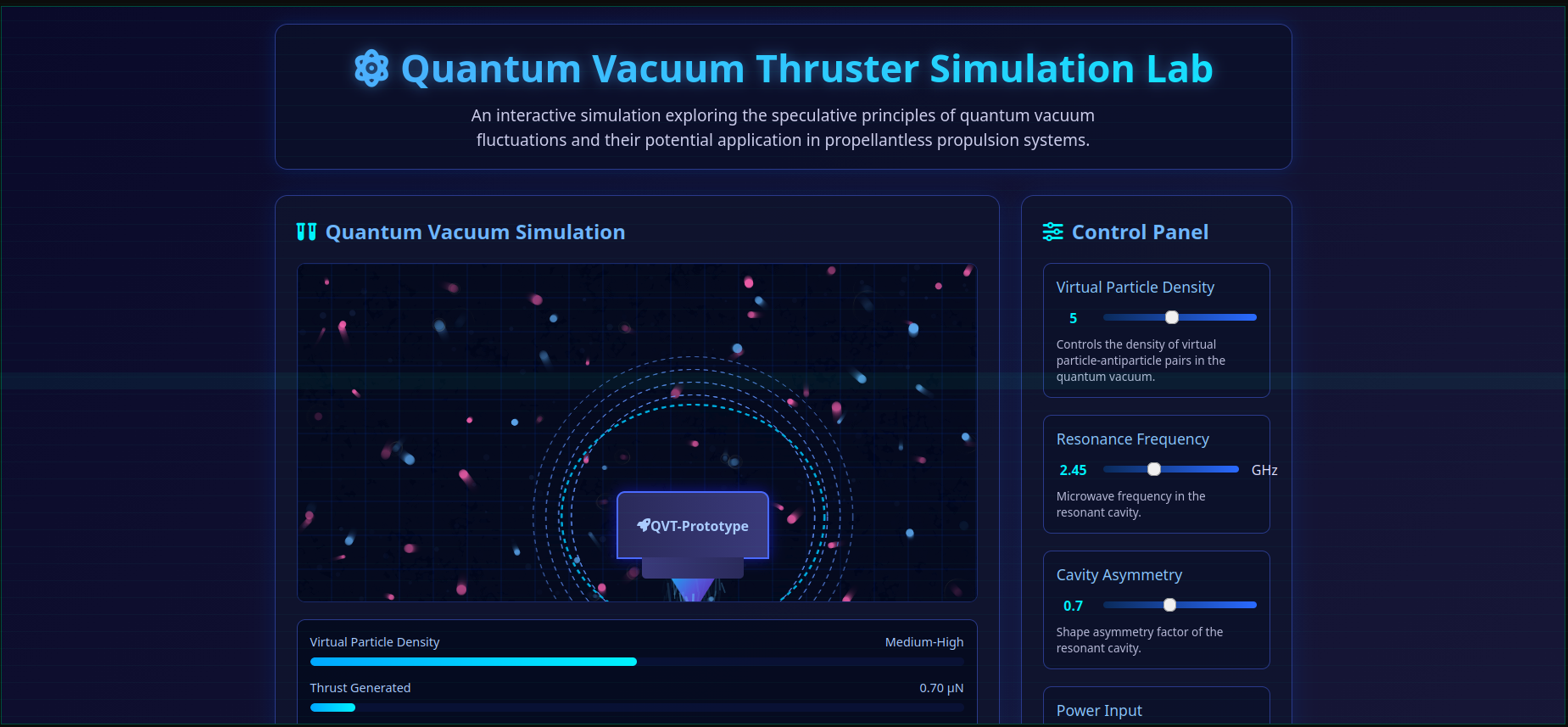

Quantum Vacuum Thruster Simulation Lab

The Quantum Vacuum Thruster Simulation Lab is an interactive visualization tool that demonstrates the principles of the EmDrive and similar controversial propulsion concepts. This simulation models how microwave resonance in an asymmetric cavity might theoretically interact with quantum vacuum fluctuations to produce thrust without traditional propellant.

LAB ACTIVE · Quantum field visualization · Real-time parameter adjustment · Theoretical thrust modeling

Quantum Vacuum Thruster Simulation Lab · 2026‑01‑18

Key Features of the Quantum Vacuum Thruster Simulation Lab

Interactive Visualization

- ✓ Real-time quantum vacuum fluctuation visualization

- ✓ Virtual particle-antiparticle pair generation and annihilation

- ✓ Microwave resonance pattern display in cavity

- ✓ Thruster position response to simulated thrust generation

Control Panel Parameters

- ✓ Virtual Particle Density: Controls quantum fluctuation density

- ✓ Resonance Frequency: Adjusts microwave frequency (1-5 GHz range)

- ✓ Cavity Asymmetry: Changes resonant cavity shape geometry

- ✓ Power Input: Controls electrical power to microwave generator (10-500W)
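A useful reference line for the Thrust vs. Power graphs is the photon rocket. A device that converts all of its input power P into a collimated light beam produces thrust F = P/c, about 3.3 µN per kilowatt. Momentum conservation allows nothing larger for a closed system that emits only radiation. The sketch evaluates this bound across the stated 10-500 W range. The auto-selecting unit formatter is an assumption about how a µN/mN/N display might behave.

```python
SPEED_OF_LIGHT_M_S = 299_792_458


def photon_rocket_thrust_n(power_w: float) -> float:
    """Thrust from emitting all input power as a collimated beam: F = P / c."""
    return power_w / SPEED_OF_LIGHT_M_S


def format_thrust(force_n: float) -> str:
    """Auto-select among the N, mN, and µN units the simulator offers."""
    for unit, scale in (("N", 1.0), ("mN", 1e-3)):
        if abs(force_n) >= scale:
            return f"{force_n / scale:.3f} {unit}"
    return f"{force_n / 1e-6:.3f} µN"


for power in (10, 100, 500):  # the simulator's 10-500 W input range
    print(f"{power:>3} W -> {format_thrust(photon_rocket_thrust_n(power))}")
```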

Real-time Measurements & Readouts

Visual Elements

Quantum Field Representation

- ✓ Virtual particle-antiparticle pairs appearing and annihilating

- ✓ Quantum vacuum background fluctuation visualization

- ✓ Color-coded energy density mapping

- ✓ Time evolution of quantum field states

Thruster & Cavity Visualization

- ✓ 3D asymmetric resonant cavity model

- ✓ Microwave standing wave pattern display

- ✓ Thruster exhaust plume visualization

- ✓ Position tracking based on simulated thrust vector

Theoretical Background Panel

Educational Applications

Simulation Controls

Interactive Features

- ✓ Real-time parameter adjustment sliders

- ✓ Preset experimental configurations

- ✓ Animation speed and detail controls

- ✓ Measurement unit toggles (µN, mN, N)

Data Analysis

- ✓ Thrust vs. Power efficiency graphs

- ✓ Resonance frequency optimization curves

- ✓ Quantum fluctuation statistical analysis

- ✓ Historical experiment data comparison

Quantum Reality Bridge Simulator

The Quantum Reality Bridge Simulator extends quantum field manipulation into the realm of multiverse theory. This advanced module visualizes how varying fundamental physics parameters could create alternate realities, and simulates the theoretical processes required to establish quantum bridges between them.

REALITY BRIDGE ACTIVE · Multiverse visualization · Physics parameter control · Quantum coherence monitoring

Quantum Reality Bridge Simulator · 2026‑01‑21

Interactive Reality Viewer Components

Visual Reality Representation

- ✓ Dynamic dimensional grid visualization (2D to 4D+)

- ✓ Quantum particle effects specific to each reality

- ✓ Real-time coherence field energy patterns

- ✓ Color-coded reality signatures based on physics parameters

Dimensional Visualization

- ✓ Fractal patterns for fractional dimensions (2.8D, 3.5D, etc.)

- ✓ Hyper-dimensional projection algorithms

- ✓ Reality boundary visualization

- ✓ Quantum foam representation for high-dimensional realities
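Fractional dimensions such as 2.8D have a standard meaning for self-similar fractals. A shape made of N copies of itself, each scaled by a factor r, has similarity dimension D = log N / log(1/r). This sketch assumes the viewer's fractal patterns are self-similar in that sense; the page does not say which construction it uses. It computes D and inverts the formula to find the scale factor for a target such as 2.8:

```python
import math


def similarity_dimension(copies: int, scale: float) -> float:
    """D = log(N) / log(1/r) for a self-similar set of N copies scaled by r."""
    return math.log(copies) / math.log(1.0 / scale)


def scale_for_dimension(copies: int, dimension: float) -> float:
    """Scale factor r that gives N copies a target dimension: r = N**(-1/D)."""
    return copies ** (-1.0 / dimension)


print(f"Sierpinski triangle: {similarity_dimension(3, 0.5):.3f}")     # 1.585
print(f"Menger sponge:       {similarity_dimension(20, 1 / 3):.3f}")  # 2.727
r = scale_for_dimension(20, 2.8)
print(f"20 copies at r = {r:.4f} give D = {similarity_dimension(20, r):.2f}")
```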

Physics Control Panel

Universe Database: Pre-defined Alternate Realities

Quantum Operations Console

System Status Display

Visual Effects & Animation Systems

Quantum Particle Systems

- ✓ Animated quantum particles in background field

- ✓ Dimension-specific movement patterns

- ✓ Color gradients based on energy levels

- ✓ Particle density tied to entropy levels

Visual Pattern Generation

- ✓ Fractal algorithms for fractional dimensions

- ✓ Crystal lattice patterns for ordered realities

- ✓ Entropy-based noise and distortion effects

- ✓ Color schemes dynamically generated from physics parameters

How to read this page

This page is a laboratory sovereignty document — a declaration of research authority, an archive of experimental lineages, and a map of conceptual territories claimed.

From foundational certification to sovereign laboratory status, this evolution represents 452 days of convergent research across quantum multiverses, medical AI, omni-synthesis systems, and experimental interfaces.